Starlink User Note 253

Mark Taylor

12 September 2025

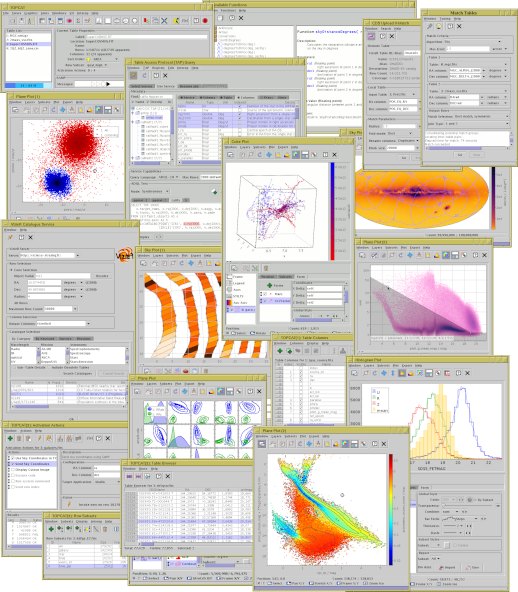

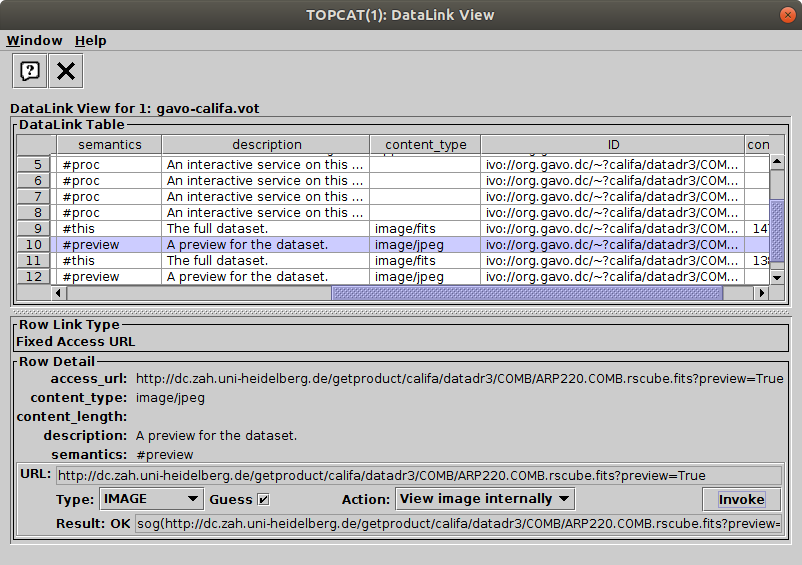

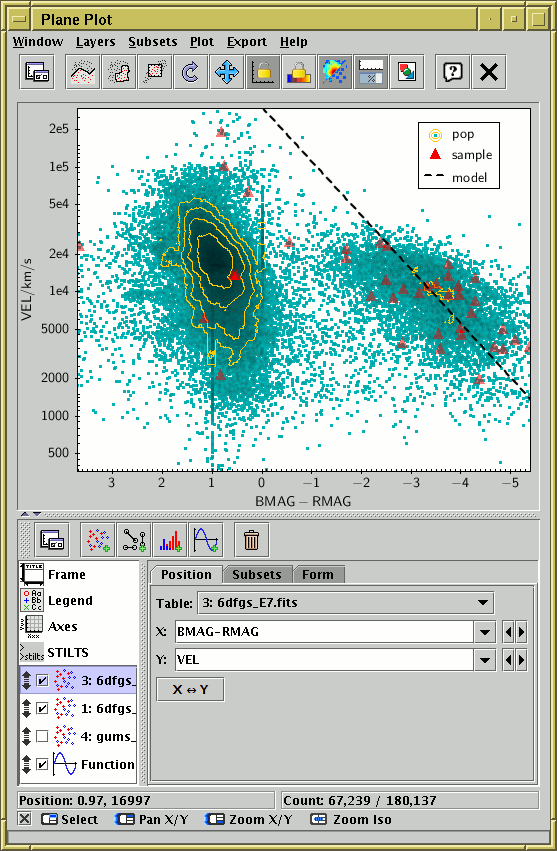

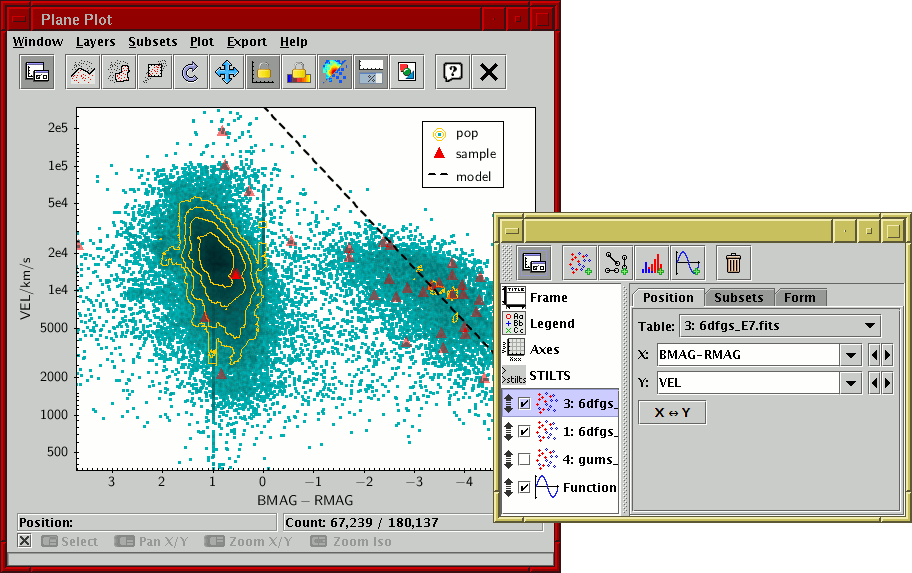

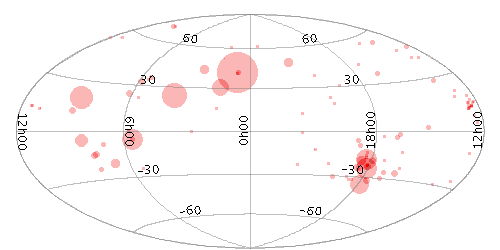

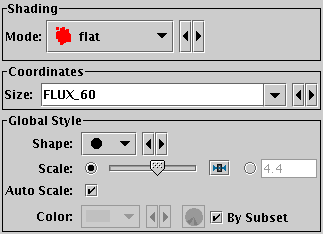

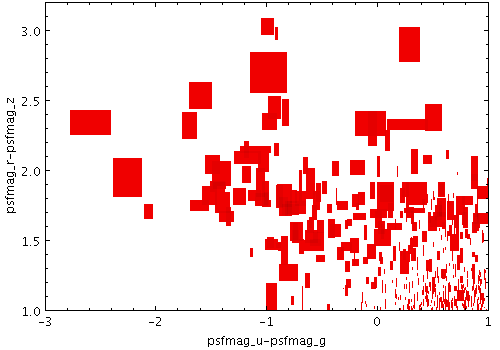

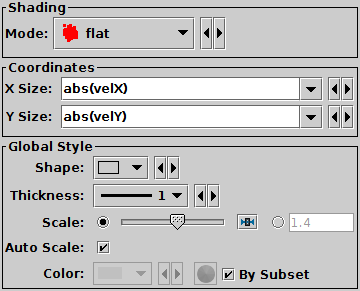

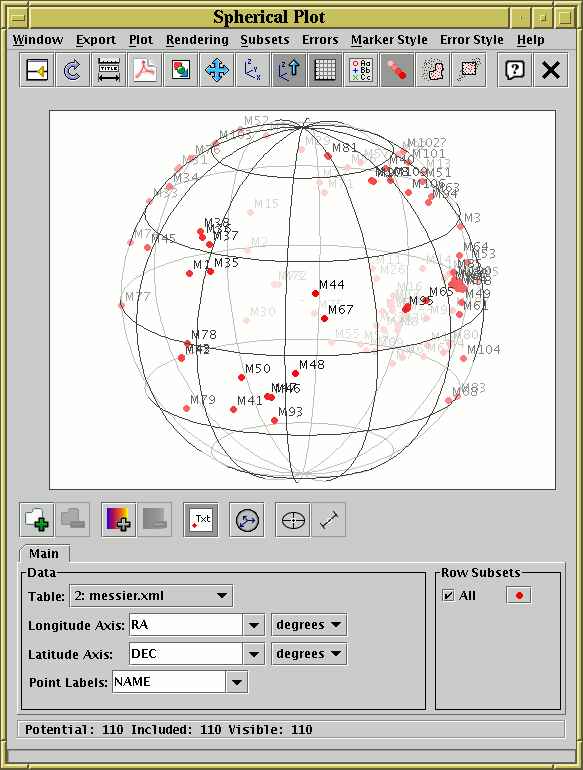

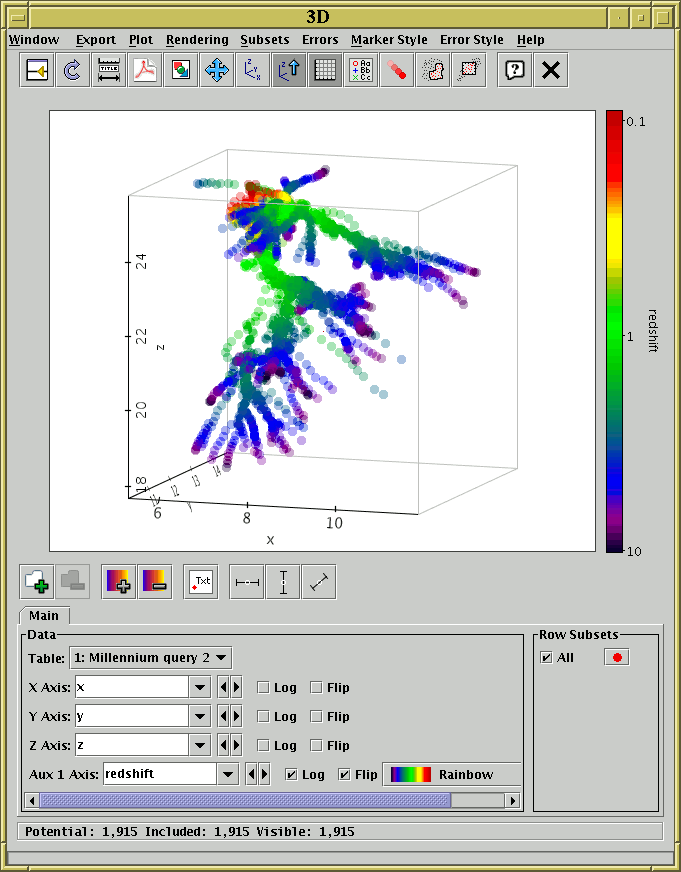

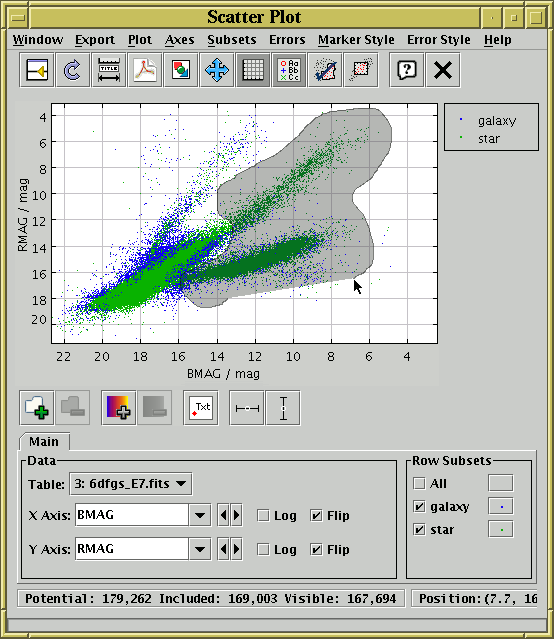

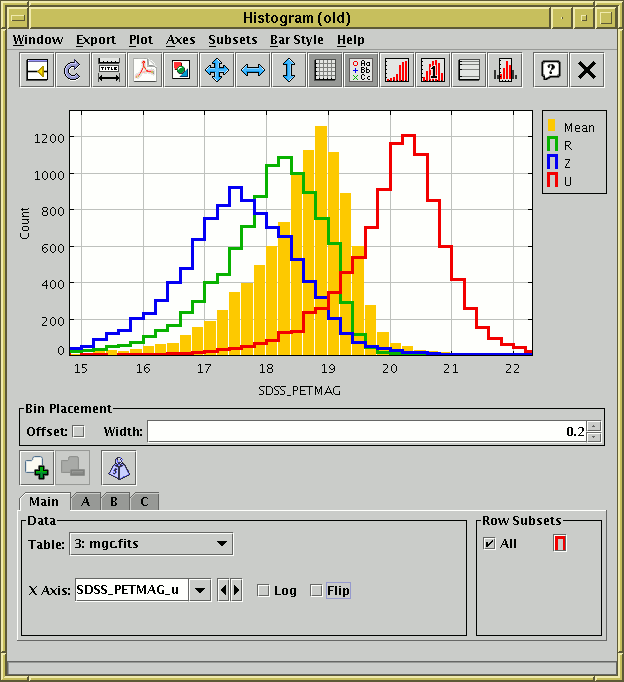

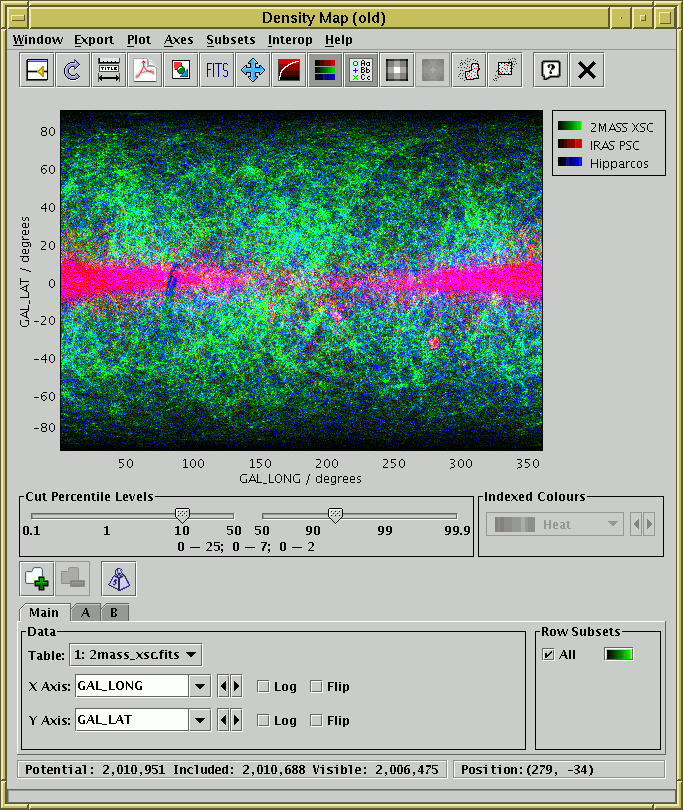

TOPCAT is an interactive graphical viewer and editor for tabular data. It has been designed for use with astronomical tables such as object catalogues, but is not restricted to astronomical applications. It understands a number of different astronomically important formats, and more formats can be added. It is designed to cope well with large tables; a million rows by a hundred columns should not present a problem even with modest memory and CPU resources.

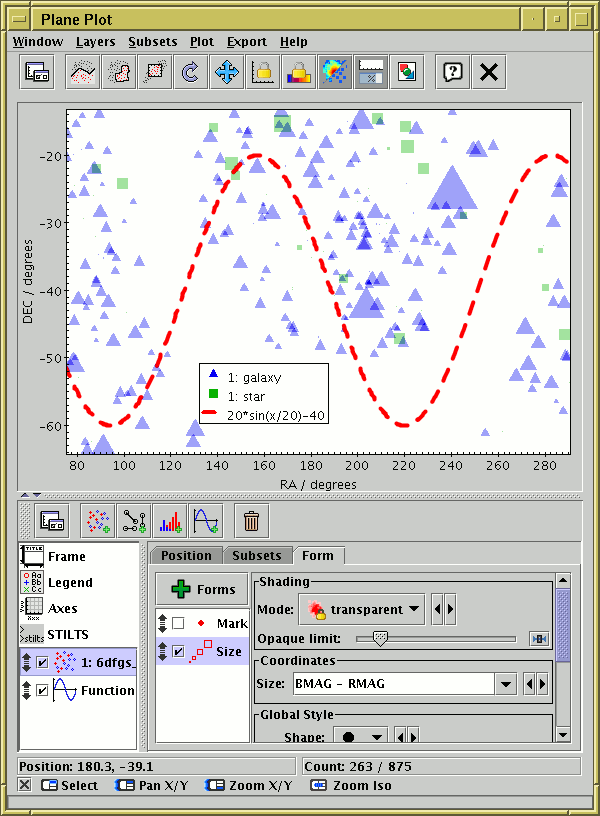

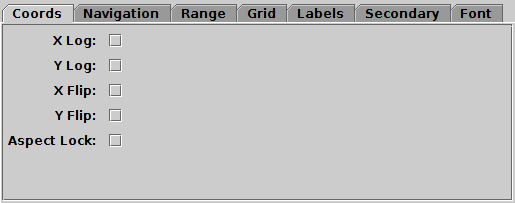

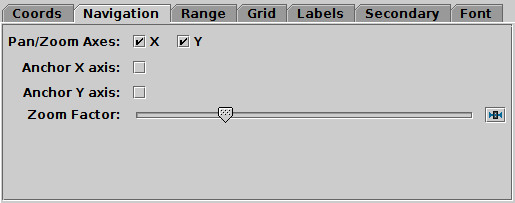

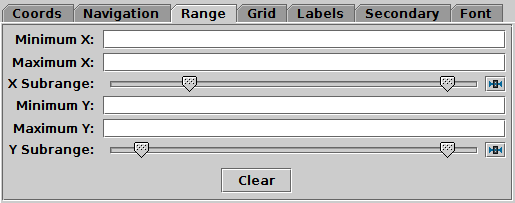

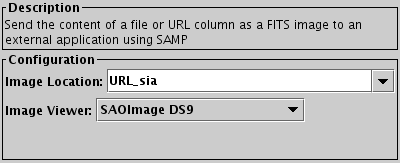

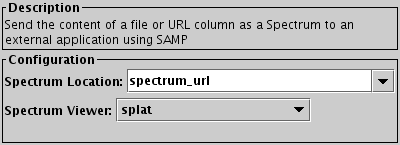

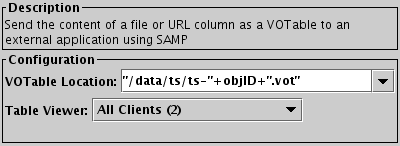

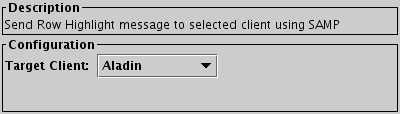

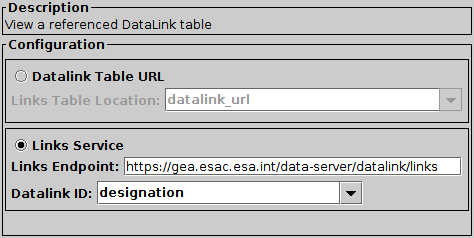

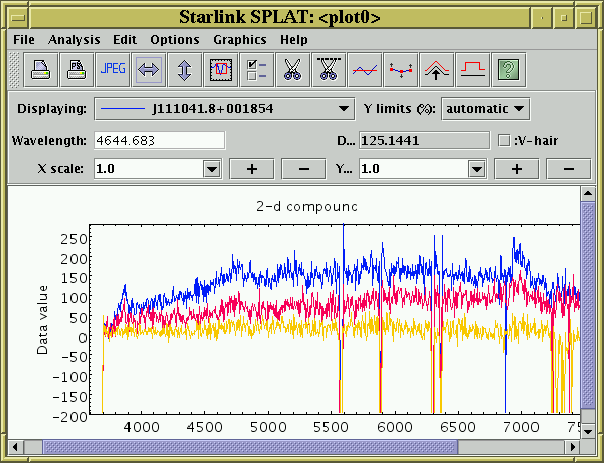

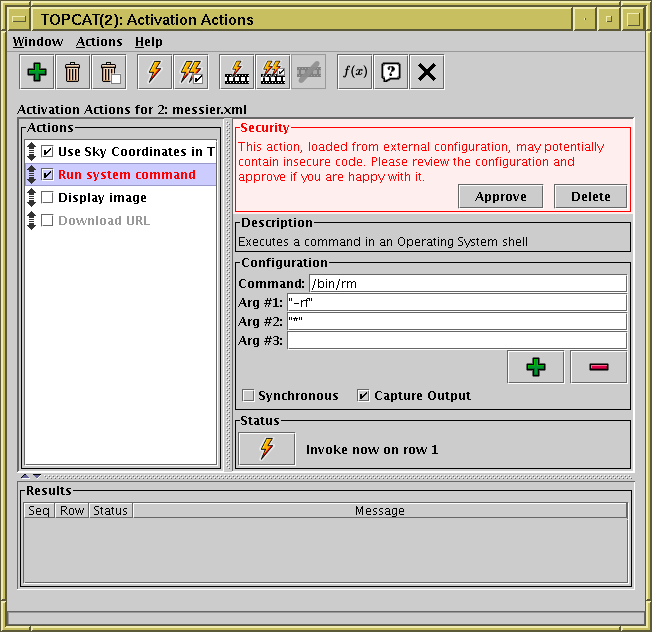

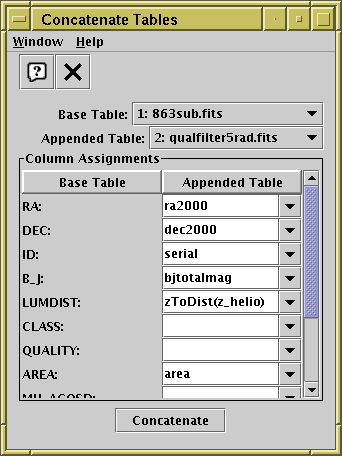

It offers a variety of ways to view and analyse the data, including a browser for the cell data themselves, viewers for information about table and column metadata, tools for joining tables using flexible matching algorithms, and extensive 2- and 3-d visualisation facilities. Using a powerful and extensible Java-based expression language new columns can be defined and row subsets selected for separate analysis. Selecting a row can be configured to trigger an action, for instance displaying an image of the catalogue object in an external viewer. Table data and metadata can be edited and the resulting modified table can be written out in a wide range of output formats.

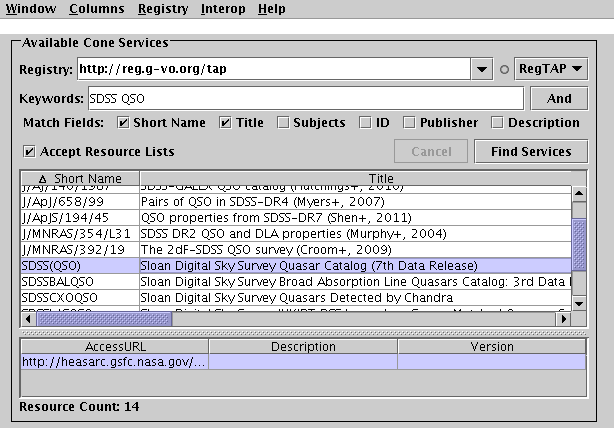

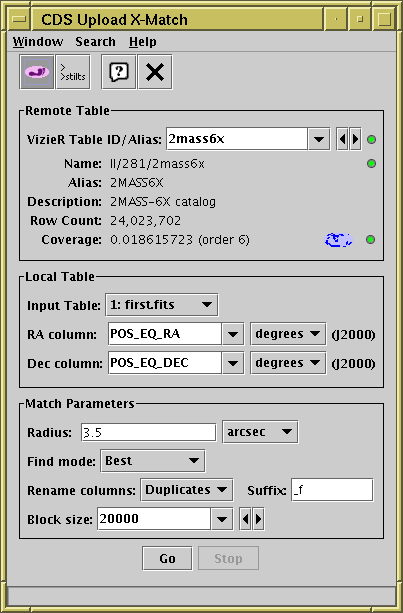

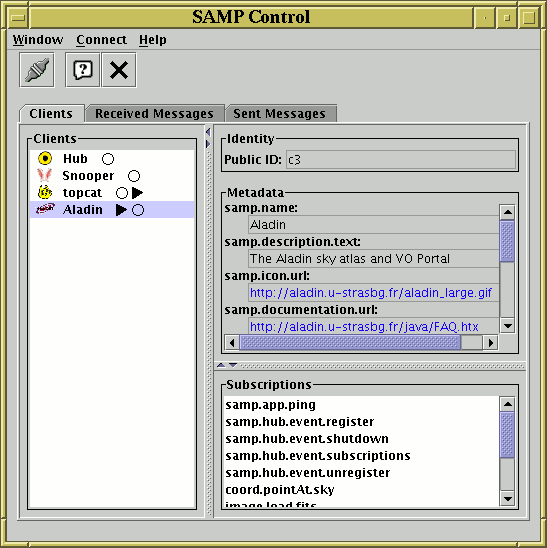

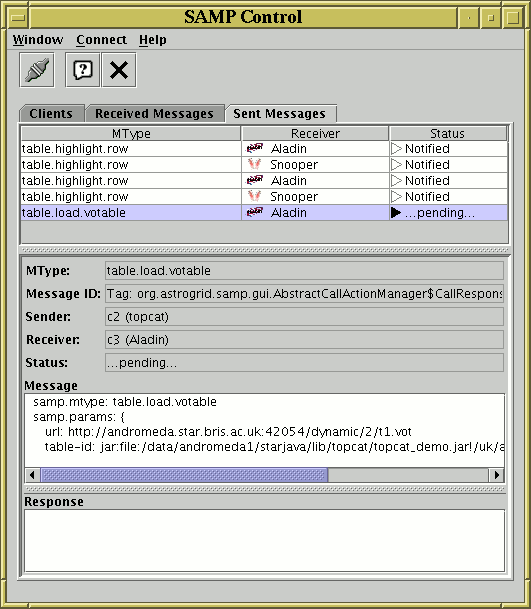

A number of options are provided for loading data from external sources, including Virtual Observatory (VO) services, thus providing a gateway to many remote archives of astronomical data. It can also interoperate with other desktop tools using the SAMP protocol.

TOPCAT is written in pure Java (except for a few optional libraries) and is available under the GNU General Public Licence. Its underlying table processing facilities are provided by STIL, the Starlink Tables Infrastructure Library.

TOPCAT is an interactive graphical program which can examine, analyse, combine, edit and write out tables. A table is, roughly, something with columns and rows; each column contains objects of the same type (for instance floating point numbers) and each row has an entry for each of the columns (though some entries might be blank). A common astronomical example of a table is an object catalogue.

TOPCAT can read in tables in a number of formats from various sources, allow you to inspect and manipulate them in various ways, and if you have edited them optionally write them out in the modified state for later use, again in a variety of formats. Here is a summary of its main capabilities:

Considerable effort has gone into making it work with large tables; a few million rows and hundreds of columns is usually quite manageable.

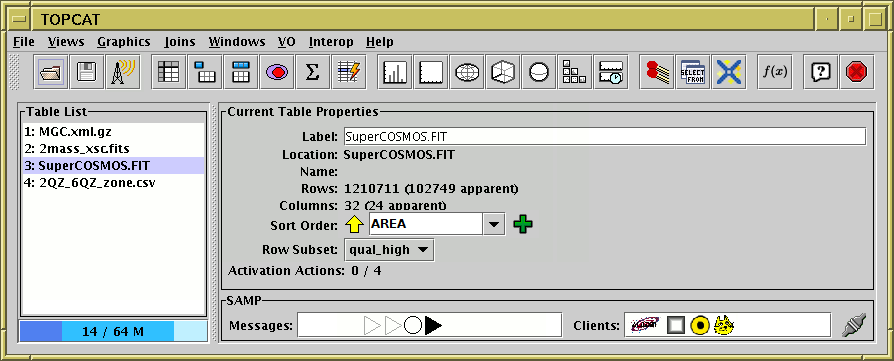

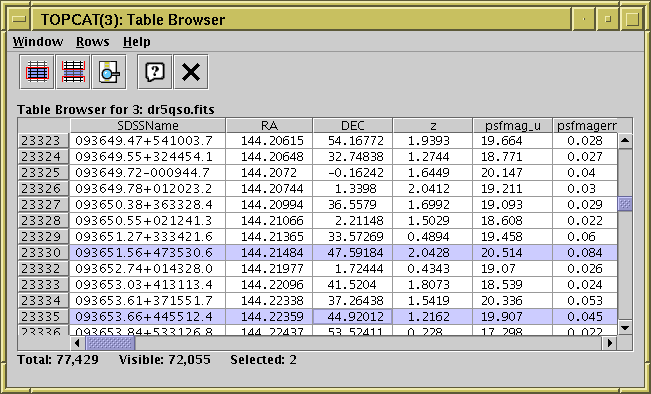

The general idea of the program is quite straightforward. At any time, it has a list of tables it knows about - these are displayed in the Control Window which is the first thing you see when you start up the program. You can add to the list by loading tables in, or by some actions which create new tables from the existing ones. When you select a table in the list by clicking on it, you can see general information about it in the control window, and you can also open more specialised view windows which allow you to inspect it in more detail or edit it. Some of the actions you can take, such as changing the current Sort Order, Row Subset or Column Set change the Apparent Table, which is a view of the table used for things such as saving it and performing row matches. Changes that you make do not directly modify the tables on disk (or wherever they came from), but if you want to save the changes you have made, you can write the modified table(s) to a new location.

The main body of this document explains these ideas and capabilities

in more detail, and

Appendix A gives a full description of all the windows which

form the application.

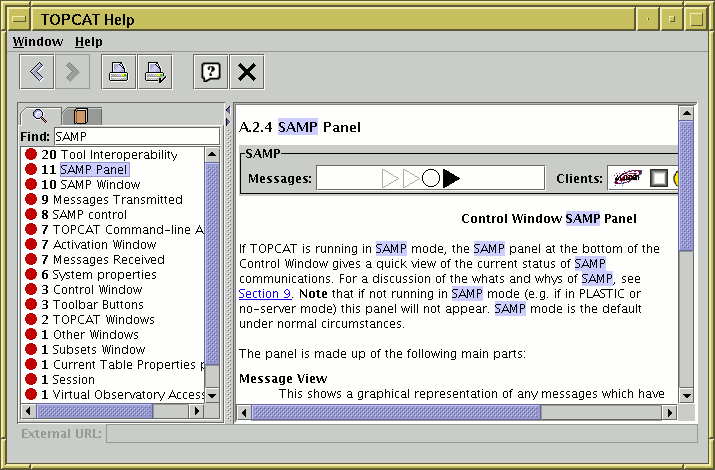

While the program is running, this document is available via the

online help system - clicking the Help ( )

toolbar button in any window will pop up a help browser open at

the page which describes that window.

This document is heavily hyperlinked, so you may find it easier to

read in its HTML form than on paper.

)

toolbar button in any window will pop up a help browser open at

the page which describes that window.

This document is heavily hyperlinked, so you may find it easier to

read in its HTML form than on paper.

Recent news about the program can be found on the TOPCAT web page. It was initially developed within the now-terminated Starlink and then AstroGrid projects, and has subsequently been supported by the UK's PPARC and STFC research councils, various Euro-VO and FP7 projects, GAVO and ESA. The underlying table handling facilities are supplied by the Starlink Tables Infrastructure Library STIL, which is documented more fully in SUN/252. The software is written in pure Java (except for some compression codecs used by the Parquet I/O handlers), and should run on any J2SE platform version 1.8 or later. This makes it highly portable, since it can run on any machine which has a suitable Java installation, which is available for MS Windows, Mac OS X and most flavours of Unix amongst others. Some of the external viewer applications it talks to rely on non-Java code however so one or two facilities, such as displaying spectra, may be absent in some cases.

The TOPCAT application is available under the terms of the GNU General Public License, since some of the libraries it uses are also GPL. However, all of the original code and many of the libraries it uses may alternatively be used under more permissive licenses such as the GNU Lesser General Public License, see documentation of the STILTS package for more details.

This manual aims to give detailed tutorial and reference documentation on most aspects of TOPCAT's capabilities, and reading it is an excellent way to learn about the program. However, it's quite a fat document, and if you feel you've got better things to do with your time than read it all, you should be able to do most things by playing around with the software and dipping into the manual (or equivalently the online help) when you can't see how to do something or the program isn't behaving as expected. This section provides a short introduction for the impatient, explaining how to get started.

To start the program, you will probably type topcat or

something like

java -jar topcat-full.jar (see Section 10 for

more detail). To view a table that you have on disk, you can either

give its name on the command line or load it using the Load

button from the GUI.

FITS, VOTable, ECSV, CDF, PDS4, feather, Parquet and GBIN files

are recognised automatically;

if your data is in another format such as ASCII (see Section 4.1.1)

you need to tell the program (e.g. -f ascii on the command line).

If you just want to try the program out, topcat -demo will

start with a couple of small tables for demonstration purposes.

The first thing that you see is the Control Window. This has a list of the loaded table(s) on the left. If one of these is highlighted by clicking on it, information about it will be shown on the right; some of this (table name, sort order) you can change here. Along the top is a toolbar with a number of buttons, most of which open up new windows. These correspond to some of the things you might most often want to do in TOPCAT, and fall into a few groups:

The menus provide alternative ways to open up these windows,

and also list a number of other, less commonly-used, options.

The Help ( ) button appears in most windows -

if you click it a help browser will be displayed showing an appropriate

part of this manual.

The Help menu gives you a few

more options along the same lines, including displaying the help

information in your usual web browser rather than in TOPCAT's (somewhat

scrappy) help viewer.

All the windows follow roughly this pattern. For some of the toolbar

buttons you can probably guess what they do from their icons,

for others probably not - to find out

you can hover with the mouse to see the tooltip,

look in the menus, read the manual, or just push it and see.

) button appears in most windows -

if you click it a help browser will be displayed showing an appropriate

part of this manual.

The Help menu gives you a few

more options along the same lines, including displaying the help

information in your usual web browser rather than in TOPCAT's (somewhat

scrappy) help viewer.

All the windows follow roughly this pattern. For some of the toolbar

buttons you can probably guess what they do from their icons,

for others probably not - to find out

you can hover with the mouse to see the tooltip,

look in the menus, read the manual, or just push it and see.

Some of the windows allow you to make changes of various sorts to the tables, such as performing sorts, selecting rows, modifying data or metadata. None of these affect the table on disk (or database, or wherever), but if you subsequently save the table the changes will be reflected in the table that you save.

A notable point to bear in mind concerns memory.

TOPCAT is fairly efficient in use of memory, but in some cases when

dealing with large tables you might see an OutOfMemoryError.

It is usually possible to work round this by using the

-XmxNNNM

flag on startup - see Section 10.2.2.

Finally, if you have queries, comments or requests about the software, and they don't appear to be addressed in the manual, consult the TOPCAT web page, use the topcat-user mailing list, or contact the author - user feedback is always welcome.

The Apparent Table is a particular view of a table which can be influenced by some of the viewing controls.

When you load a table into TOPCAT it has a number of characteristics like the number of columns and rows it contains, the order of the rows that make up the data, the data and metadata themselves, and so on. While manipulating it you can modify the way that the table appears to the program, by changing or adding data or metadata, or changing the order or selection of columns or rows that are visible. For each table its "apparent table" is a table which corresponds to the current state of the table according to the changes that you have made.

In detail, the apparent table consists of the table as it was originally imported into the program plus any of the following changes that you have made:

The apparent table is used in the following contexts:

) toolbar button,

the resulting table will contain only those ten rows.

) toolbar button,

the resulting table will contain only those ten rows.

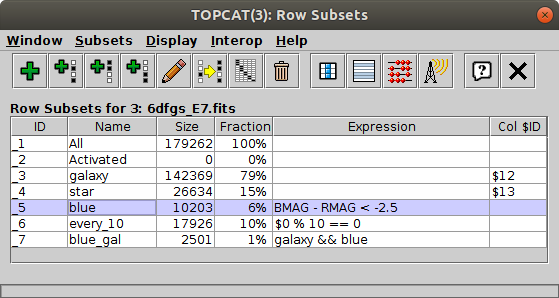

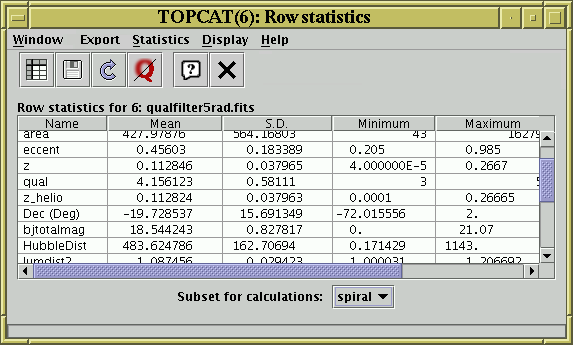

An important feature of TOPCAT is the ability to define and use Row Subsets. A Row Subset is a selection of the rows within a whole table being viewed within the application, or equivalently a new table composed from some subset of its rows. You can define these and use them in several different ways; the usefulness comes from defining them in one context and using them in another. The Subset Window displays the currently defined Row Subsets and permits some operations on them.

At any time each table has a current row subset, and this affects the Apparent Table. You can always see what it is by looking at the "Row Subset" selector in the Control Window when that table is selected; by default it is one containing all the rows. You can change it by choosing from this selector or as a result of some other actions.

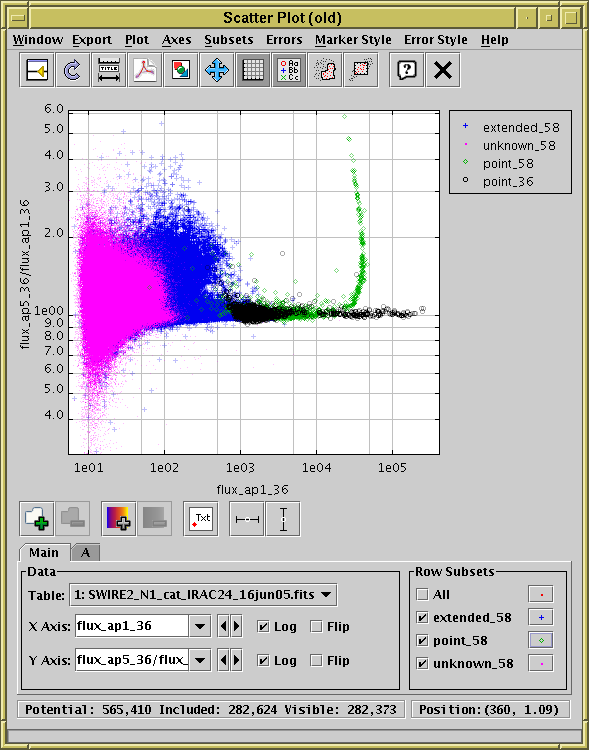

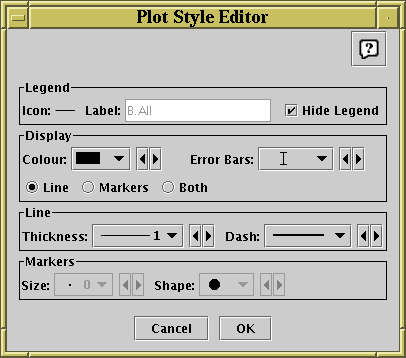

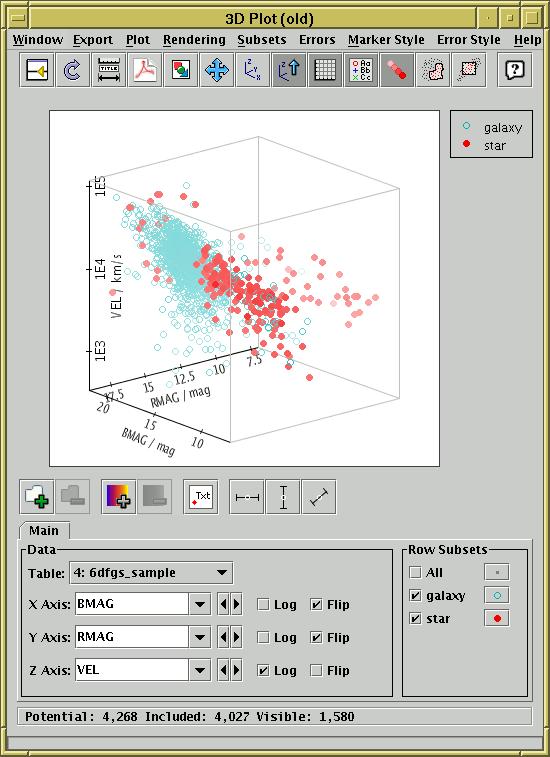

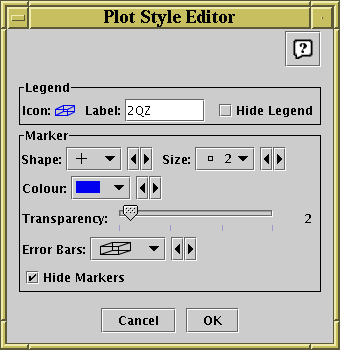

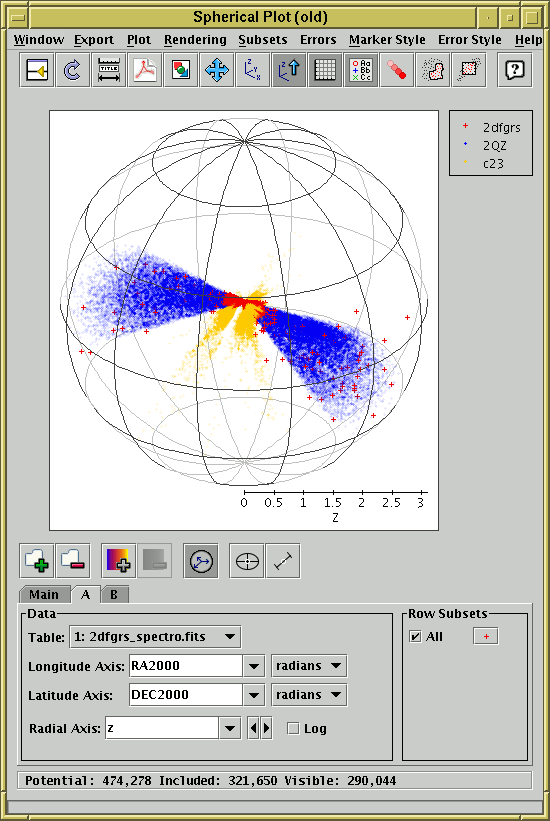

Other contexts in which subsets can be used are picking a selection of rows from which to calculate in the Statistics Window and marking groups of rows to plot using different markers in the various plotting (and old-style plotting) windows.

Tables always have two special subsets:

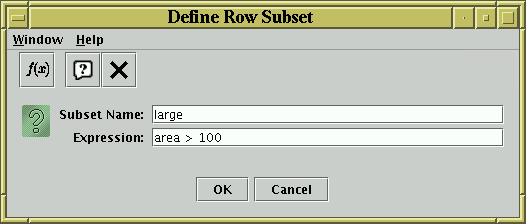

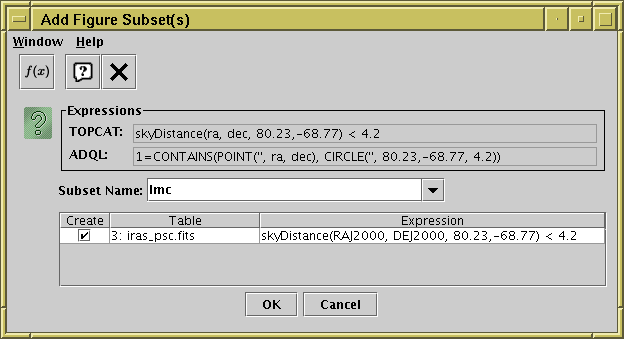

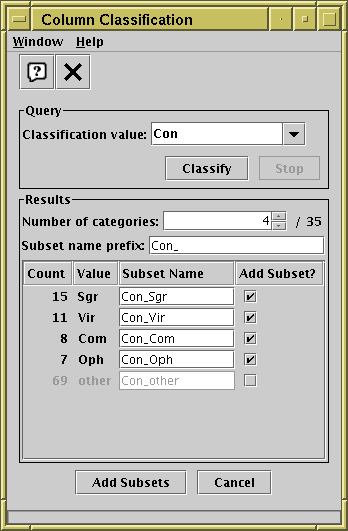

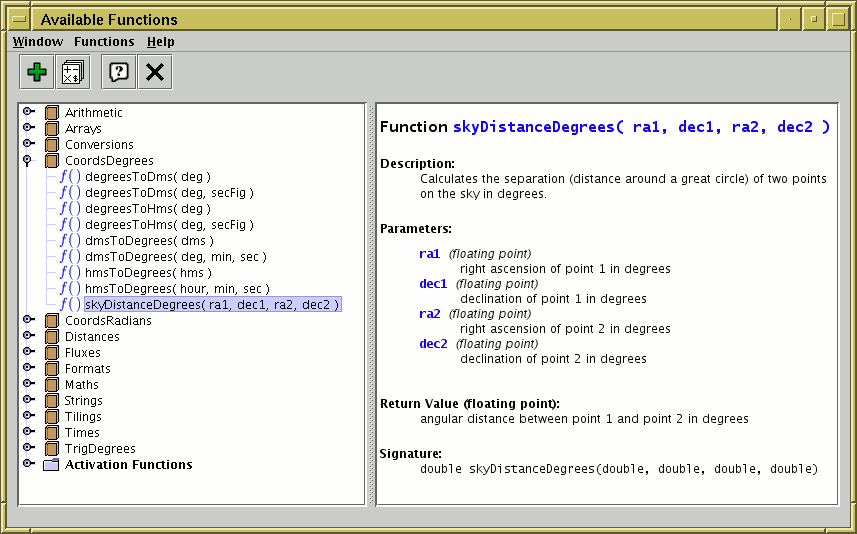

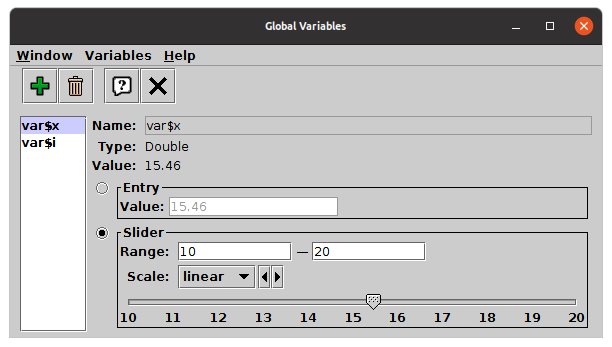

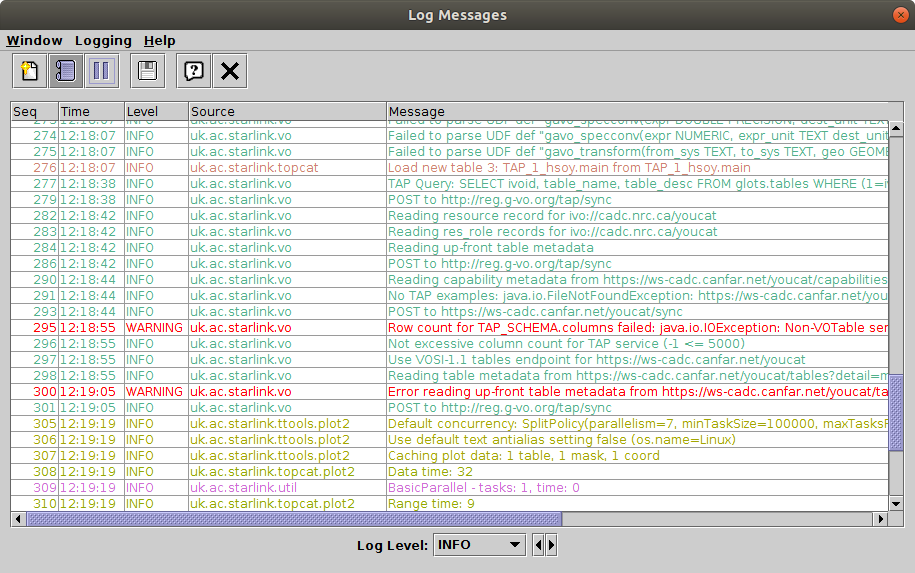

You can define a Row Subset in one of the following ways:

) button will pop up

the Algebraic Subset Window

which allows you to define a new subset using an algebraic expression

based on the values of the cells in each row.

The format of such expressions is described in Section 7.

The Subsets Window also provides some variants on this option

for convenience, like selecting

the first N (

) button will pop up

the Algebraic Subset Window

which allows you to define a new subset using an algebraic expression

based on the values of the cells in each row.

The format of such expressions is described in Section 7.

The Subsets Window also provides some variants on this option

for convenience, like selecting

the first N ( ),

last N (

),

last N ( ),

or every Nth (

),

or every Nth ( ) rows,

or the complement (

) rows,

or the complement ( ) of an existing subset.

) of an existing subset.

),

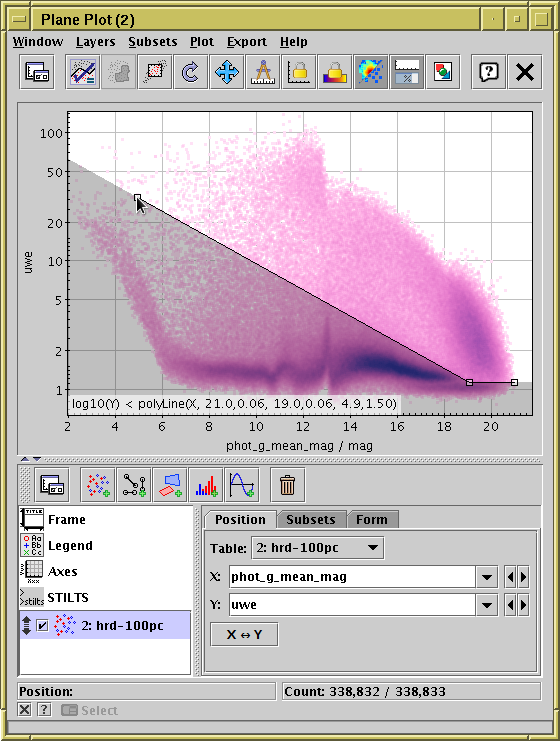

Algebraic Subset From Visible (

),

Algebraic Subset From Visible ( ),

Draw Subset Blob (

),

Draw Subset Blob ( ) and

Draw Algebraic Subset (

) and

Draw Algebraic Subset ( ),

though not all are available for all plot types.

),

though not all are available for all plot types.

) or

Subset From Unselected Rows (

) or

Subset From Unselected Rows ( )

buttons to create a new subset based

on the set of highlighted rows or their complement.

Combining this with sorting

the rows in the table can be useful;

if you do a Sort Up on a given column and then drag out the

top few rows of the table you can easily create a subset consisting

of the highest values of a given column.

)

buttons to create a new subset based

on the set of highlighted rows or their complement.

Combining this with sorting

the rows in the table can be useful;

if you do a Sort Up on a given column and then drag out the

top few rows of the table you can easily create a subset consisting

of the highest values of a given column.

In all these cases you will be asked to assign a name for the subset. As with column names, it is a good idea to follow a few rules for these names so that they can be used in algebraic expressions. They should be:

In the first subset definition method above, the current subset will be set immediately to the newly created one. In other cases the new subset may be highlighted appropriately in other windows, for instance by being plotted in scatter plot windows.

You can sort the rows of each table according to the values in the table. Normally you will want to sort on a numeric column, but other values may be sortable too, for instance a String column will sort alphabetically. Some kinds of columns (e.g. array ones) don't have any well-defined order, and it is not possible to select these for sorting on.

At any time, each table has a current row order,

and this affects the Apparent Table.

You can always see what it is by looking under

the Sort Order item

in the Control Window when that table

is selected; by default it is blank, which means the rows have the

same order as that of the table they were loaded in from.

The little arrow ( /

/ ) indicates whether

the sense of the sort is up or down.

You can change the sort order by selecting a column name

or entering an algebraic expression

in this selector, and change the sense by clicking on the arrow.

To sort on multiple columns, use the Add/Remove Selector

(

) indicates whether

the sense of the sort is up or down.

You can change the sort order by selecting a column name

or entering an algebraic expression

in this selector, and change the sense by clicking on the arrow.

To sort on multiple columns, use the Add/Remove Selector

(

) buttons to change the number of selectors;

selectors to the left are more significant, and ones to the right

are used in case of a tie in earlier values.

The sort order can also be changed by using menu items in the

Columns Window or right-clicking

popup menus in the Data Window.

) buttons to change the number of selectors;

selectors to the left are more significant, and ones to the right

are used in case of a tie in earlier values.

The sort order can also be changed by using menu items in the

Columns Window or right-clicking

popup menus in the Data Window.

Selecting values to sort by calculates the new row order by performing a sort on the cell values there and then. If the table data change somehow (e.g. because you edit cells in the table) then it is possible for the sort order to become out of date. If that happens you can resort by making a new selection and then changing it back again.

The current row order affects the Apparent Table, and hence determines the order of rows in tables which are exported in any way (e.g. written out) from TOPCAT. You can always see the rows in their currently sorted order in the Data Window.

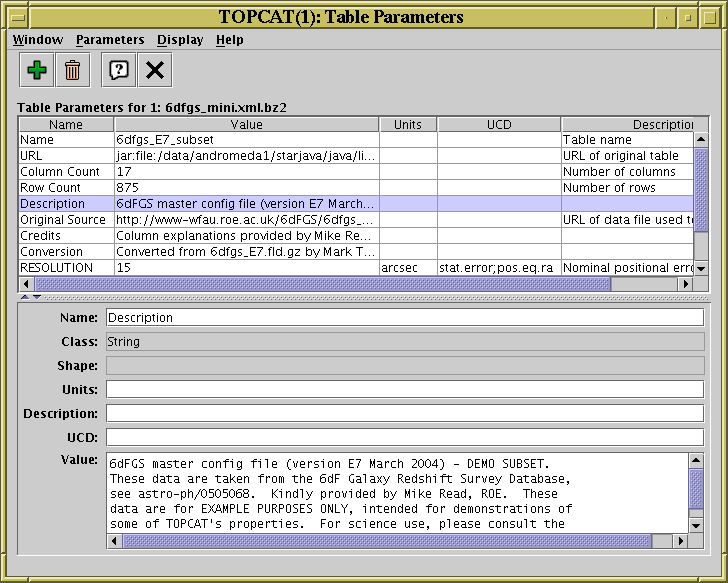

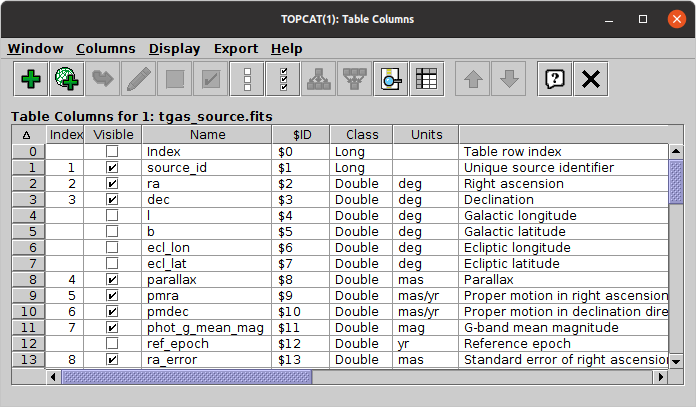

When each table is imported it has a list of columns. Each column has header information which determines the kind of data which can fill the cells of that column as well as a name, and maybe some additional information like units and Unified Content Descriptor. All this information can be viewed, and in some cases modified, in the Columns Window.

During the lifetime of the table within TOPCAT, this list of columns can be changed by adding new columns, hiding (and perhaps subsequently revealing) existing columns, and changing their order. The current state of which columns are present and visible and what order they are in is collectively known as the Column Set, and affects the Apparent Table. The current Column Set is always reflected in the columns displayed in the Data Window and Statistics Window. The Columns Window shows all the known columns, including hidden ones; whether they are currently visible is indicated by the checkbox in the Visible column. By default, the Columns Window and Statistics Window also reflect the current column set order, though there are options to change this view.

You can affect the current Column Set in the following ways:

) or

Reveal Selected (

) or

Reveal Selected ( )

button in the toolbar or menu.

Note when selecting rows, don't drag the mouse over the Visible

column, do it somewhere in the middle of the table.

The Hide All (

)

button in the toolbar or menu.

Note when selecting rows, don't drag the mouse over the Visible

column, do it somewhere in the middle of the table.

The Hide All ( ) and

Reveal All (

) and

Reveal All ( )

buttons set all columns in the table invisible or visible -

a useful convenience if you've got a very wide table.

)

buttons set all columns in the table invisible or visible -

a useful convenience if you've got a very wide table.

You can also hide a column by right-clicking on it in the Data Window, which brings up a popup menu - select the Hide option. To make it visible again you have to go to the Columns Window as above.

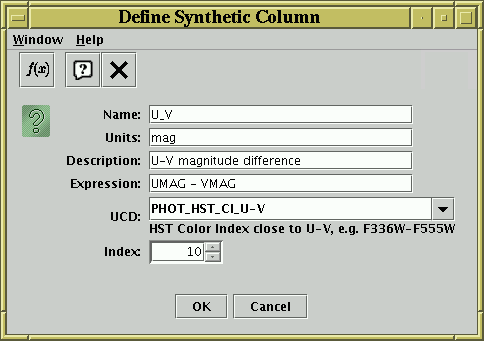

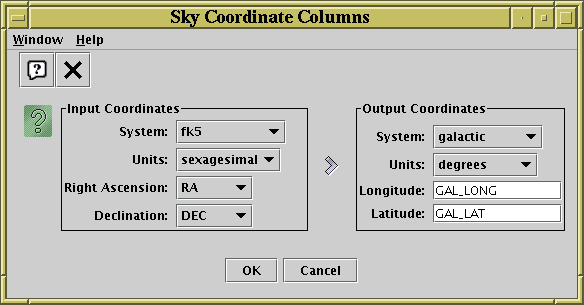

)

or New Sky Coordinate Columns (

)

or New Sky Coordinate Columns ( ) buttons in the

Columns Window or the (right-click) popup menu in the

Data Window to add new columns derived from exsiting ones.

) buttons in the

Columns Window or the (right-click) popup menu in the

Data Window to add new columns derived from exsiting ones.

) button.

This is similar to the Add a Synthetic Column

described in the previous item, but it pops up a new column

dialogue with similar characteristics (name, units etc)

to those of the column that's being replaced, and when completed

it slots the new column in to the table hiding the old one.

) button.

This is similar to the Add a Synthetic Column

described in the previous item, but it pops up a new column

dialogue with similar characteristics (name, units etc)

to those of the column that's being replaced, and when completed

it slots the new column in to the table hiding the old one.

) button, which will add

a new boolean column to the table with the value true

for rows part of that subset and false for the other rows.

) button, which will add

a new boolean column to the table with the value true

for rows part of that subset and false for the other rows.

Tables can be loaded into TOPCAT using the Load Window or from the command line, or acquired from VO services, and saved using the Save Window. This section describes the file formats supported for input and output, as well as the syntax to use when specifying a table by name, either as a file/URL or using a scheme specification.

TOPCAT supports a number of different serialization formats for table data; some have better facilities for storing table data and metadata than others.

Since you can load a table from one format and save it in a different

one, TOPCAT can be used to convert a table from one format to another.

If this is all you want to do however, you may find it more

convenient to use the tcopy or tpipe

command line utilities in the

STILTS package.

The following subsections describe the available formats for reading and writing tables. The two operations are separate, so not all the supported input formats have matching output formats and vice versa.

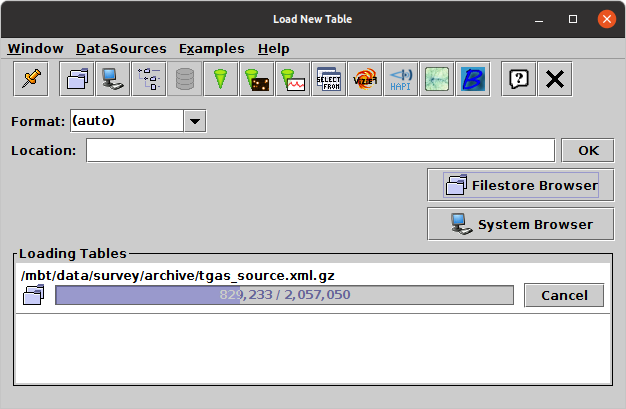

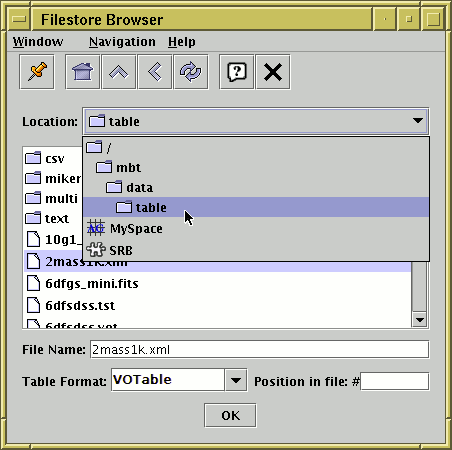

Loading table into TOPCAT from files or URLs is done either

using the Load Table dialogue

or one of its sub-windows,

or from the command line when you start the program.

For some file formats (e.g. FITS, VOTable, CDF),

the format can be automatically determined by

looking at the file content, regardless of filename;

for others (e.g. CSV files with a ".csv" extension),

TOPCAT may be able to use the filename as a hint to guess the format

(the details of these rules are given in the format-specific

subsections below).

In other cases though,

you will have to specify the format that the file is in.

In the Load Window, there is a selection box from which you can

choose the format, and from the command line you use the

-f flag - see Section 10 for details.

You can always specify the format rather than using automatic detection

if you prefer - this is slightly more efficient,

and may give you a more detailed error message

if a table fails to load.

In either case, table locations may be given as filenames or as URLs, and any data compression (gzip, unix compress and bzip2) will be automatically detected and dealt with - see Section 4.2.

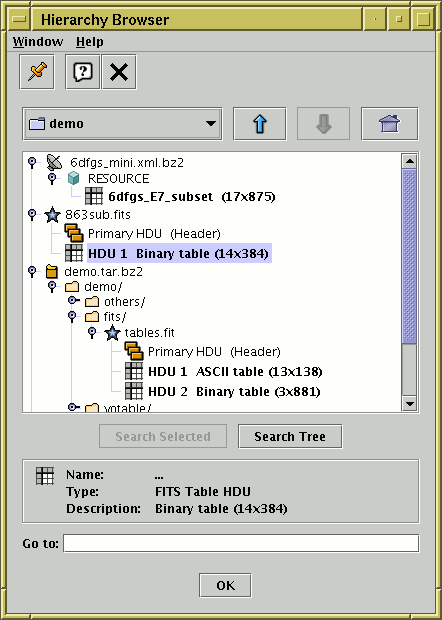

Some of the formats

(e.g. FITS, VOTable)

are capable of storing more than one table;

usually loading such files loads all the tables into TOPCAT.

If you want to specify only a single table,

you can give a position indicator either after a "#" sign

at the end of the filename or using the

Position in file field in the

Filestore Browser or similar.

The details of the syntax for this is given in the relevant

format description below.

The following sections describe the table formats which TOPCAT can read.

FITS is a very well-established format for storage of

astronomical table or image data

(see https://fits.gsfc.nasa.gov/).

This reader can read tables stored in

binary (XTENSION='BINTABLE') and

ASCII (XTENSION='TABLE') table extensions;

any image data is ignored.

Currently, binary table extensions are read much more efficiently

than ASCII ones.

When a table is stored in a BINTABLE extension in an uncompressed FITS file on disk, the table is 'mapped' into memory; this generally means very fast loading and low memory usage. FITS tables are thus usually efficient to use.

Limited support is provided for the semi-standard HEALPix-FITS convention; such information about HEALPix level and coordinate system is read and made available for application usage and user examination.

A private convention is used to support encoding of tables with more than 999 columns (not possible in standard FITS); see Section 4.1.3.2.

Header cards in the table's HDU header will be made available as table parameters. Only header cards which are not used to specify the table format itself are visible as parameters (e.g. NAXIS, TTYPE* etc cards are not). HISTORY and COMMENT cards are run together as one multi-line value.

Any 64-bit integer column with a non-zero integer offset

(TFORMn='K', TSCALn=1, TZEROn<>0)

is represented in the read table as Strings giving the decimal integer value,

since no numeric type in Java is capable of representing the whole range of

possible inputs. Such columns are most commonly seen representing

unsigned long values.

Where a multi-extension FITS file contains more than one table, a single table may be specified using the position indicator, which may take one of the following forms:

spec23.fits.gz"

with one primary HDU and two BINTABLE extensions,

you would view the first one using the name "spec23.fits.gz"

or "spec23.fits.gz#1"

and the second one using the name "spec23.fits.gz#2".

The suffix "#0" is never used for a legal

FITS file, since the primary HDU cannot contain a table.

EXTNAME header in the HDU,

or alternatively the value of EXTNAME

followed by "-" followed by the value of EXTVER.

This follows the recommendation in

the FITS standard that EXTNAME and EXTVER

headers can be used to identify an HDU.

So in a multi-extension FITS file "cat.fits"

where a table extension

has EXTNAME='UV_DATA' and EXTVER=3,

it could be referenced as

"cat.fits#UV_DATA" or "cat.fits#UV_DATA-3".

Matching of these names is case-insensitive.

Files in this format may contain multiple tables;

depending on the context, either one or all tables

will be read.

Where only one table is required,

either the first one in the file is used,

or the required one can be specified after the

"#" character at the end of the filename.

This format can be automatically identified by its content so you do not need to specify the format explicitly when reading FITS tables, regardless of the filename.

There are actually two FITS input handlers,

fits-basic and fits-plus.

The fits-basic handler extracts standard column metadata

from FITS headers of the HDU in which the table is found,

while the fits-plus handler reads column and table metadata

from VOTable content stored in the primary HDU of the multi-extension

FITS file.

FITS-plus is a private convention effectively defined by the

corresponding output handler; it allows de/serialization of

much richer metadata than can be stored in standard FITS headers

when the FITS file is read by fits-plus-aware readers,

though other readers can understand the unenhanced FITS file perfectly well.

It is normally not necessary to worry about this distinction;

TOPCAT will determine whether a FITS file is FITS-plus or not based on its

content and use the appropriate handler, but if you want to force the

reader to use or ignore the enriched header, you can explicitly select

an input format of "FITS-plus" or "FITS".

The details of the FITS-plus convention are described in Section 4.1.3.1.

As well as normal binary and ASCII FITS tables, STIL supports

FITS files which contain tabular data stored in column-oriented format.

This means that the table is stored in a BINTABLE extension HDU,

but that BINTABLE has a single row, with each cell of that row

holding a whole column's worth of data. The final (slowest-varying)

dimension of each of these cells (declared via the TDIMn headers)

is the same for every column, namely,

the number of rows in the table that is represented.

The point of this is that all the cells for each column are stored

contiguously, which for very large, and especially very wide tables

means that certain access patterns (basically, ones which access

only a small proportion of the columns in a table) can be much more

efficient since they require less I/O overhead in reading data blocks.

Such tables are perfectly legal FITS files, but general-purpose FITS software may not recognise them as multi-row tables in the usual way. This format is mostly intended for the case where you have a large table in some other format (possibly the result of an SQL query) and you wish to cache it in a way which can be read efficiently by a STIL-based application.

For performance reasons, it is advisable to access colfits files uncompressed on disk. Reading them from a remote URL, or in gzipped form, may be rather slow (in earlier versions it was not supported at all).

This format can be automatically identified by its content so you do not need to specify the format explicitly when reading colfits-basic tables, regardless of the filename.

Like the normal (row-oriented) FITS handler,

two variants are supported:

with (colfits-plus) or without (colfits-basic)

metadata stored as a VOTable byte array in the primary HDU.

For details of the FITS-plus convention, see Section 4.1.3.1.

VOTable is an XML-based format for tabular data endorsed by the International Virtual Observatory Alliance; while the tabular data which can be encoded is by design close to what FITS allows, it provides for much richer encoding of structure and metadata. Most of the table data exchanged by VO services is in VOTable format, and it can be used for local table storage as well.

Any table which conforms to the VOTable 1.0, 1.1, 1.2, 1.3 or 1.4 specifications can be read. This includes all the defined cell data serializations; cell data may be included in-line as XML elements (TABLEDATA serialization), included/referenced as a FITS table (FITS serialization), or included/referenced as a raw binary stream (BINARY or BINARY2 serialization). The handler does not attempt to be fussy about input VOTable documents, and it will have a good go at reading VOTables which violate the standards in various ways.

Much, but not all, of the metadata contained in a VOTable

document is retained when the table is read in.

The attributes

unit, ucd, xtype and utype,

and the elements

COOSYS, TIMESYS and DESCRIPTION

attached to table columns or parameters,

are read and may be used by the application as appropriate

or examined by the user.

However, information encoded in the hierarchical structure

of the VOTable document, including GROUP structure, is not

currently retained when a VOTable is read.

VOTable documents may contain more than one actual table

(TABLE element).

To specify a specific single table,

the table position indicator is given by the

zero-based index of the TABLE element in a breadth-first search.

Here is an example VOTable document:

<VOTABLE>

<RESOURCE>

<TABLE name="Star Catalogue"> ... </TABLE>

<TABLE name="Galaxy Catalogue"> ... </TABLE>

</RESOURCE>

</VOTABLE>

If this is available in a file named "cats.xml"

then the two tables could be named as

"cats.xml#0" and "cats.xml#1" respectively.

Files in this format may contain multiple tables;

depending on the context, either one or all tables

will be read.

Where only one table is required,

either the first one in the file is used,

or the required one can be specified after the

"#" character at the end of the filename.

This format can be automatically identified by its content so you do not need to specify the format explicitly when reading VOTable tables, regardless of the filename.

NASA's Common Data Format, described at https://cdf.gsfc.nasa.gov/, is a binary format for storing self-described data. It is typically used to store tabular data for subject areas like space and solar physics.

CDF does not store tables as such, but sets of variables

(columns) which are typically linked to a time quantity;

there may be multiple such disjoint sets in a single CDF file.

This reader attempts to extract these sets into separate tables

using, where present, the DEPEND_0 attribute

defined by the

ISTP Metadata Guidelines.

Where there are multiple tables they can be identified

using a "#" symbol at the end of the filename

by index ("<file>.cdf#0" is the first table)

or by the name of the independent variable

("<file>.cdf#EPOCH" is the table relating to

the EPOCH column).

Files in this format may contain multiple tables;

depending on the context, either one or all tables

will be read.

Where only one table is required,

either the first one in the file is used,

or the required one can be specified after the

"#" character at the end of the filename.

This format can be automatically identified by its content so you do not need to specify the format explicitly when reading CDF tables, regardless of the filename.

Comma-separated value ("CSV") format is a common semi-standard text-based format in which fields are delimited by commas. Spreadsheets and databases are often able to export data in some variant of it. The intention is to read tables in the version of the format spoken by MS Excel amongst other applications, though the documentation on which it was based was not obtained directly from Microsoft.

The rules for data which it understands are as follows:

#" character

(or anything else) to introduce "comment" lines.

Because the CSV format contains no metadata beyond column names,

the handler is forced to guess the datatype of the values in each column.

It does this by reading the whole file through once and guessing

on the basis of what it has seen (though see the maxSample

configuration option). This has the disadvantages:

The delimiter option makes it possible to use non-comma

characters to separate fields. Depending on the character used this

may behave in surprising ways; in particular for space-separated fields

the ascii format may be a better choice.

The handler behaviour may be modified by specifying

one or more comma-separated name=value configuration options

in parentheses after the handler name, e.g.

"csv(header=true,delimiter=|)".

The following options are available:

header = true|false|null

true: the first line is a header line containing column namesfalse: all lines are data lines, and column names will be assigned automaticallynull: a guess will be made about whether the first line is a header or not depending on what it looks likenull (auto-determination).

This usually works OK, but can get into trouble if

all the columns look like string values.

(Default: null)

delimiter = <char>|0xNN

|", a hexadecimal character code like "0x7C", or one of the names "comma", "space" or "tab". Some choices of delimiter, for instance whitespace characters, might not work well or might behave in surprising ways.

(Default: ,)

maxSample = <int>

0)

notypes = <type>[;<type>...]

blank, boolean, short, int, long, float, double, date, hms and dms. So if you want to make sure that all integer and floating-point columns are 64-bit (i.e. long and double respectively) you can set this value to "short;int;float".This format cannot be automatically identified

by its content, so in general it is necessary

to specify that a table is in

CSV

format when reading it.

However, if the input file has

the extension ".csv" (case insensitive)

an attempt will be made to read it using this format.

An example looks like this:

RECNO,SPECIES,NAME,LEGS,HEIGHT,MAMMAL 1,pig,Pigling Bland,4,0.8,true 2,cow,Daisy,4,2.0,true 3,goldfish,Dobbin,,0.05,false 4,ant,,6,0.001,false 5,ant,,6,0.001,false 6,queen ant,Ma'am,6,0.002,false 7,human,Mark,2,1.8,true

See also ECSV as a format which is similar and capable of storing more metadata.

The Enhanced Character Separated Values format was developed within the Astropy project and is described in Astropy APE6 (DOI). It is composed of a YAML header followed by a CSV-like body, and is intended to be a human-readable and maybe even human-writable format with rich metadata. Most of the useful per-column and per-table metadata is preserved when de/serializing to this format. The version supported by this reader is currently ECSV 1.0.

There are various ways to format the YAML header, but a simple example of an ECSV file looks like this:

# %ECSV 1.0

# ---

# delimiter: ','

# datatype: [

# { name: index, datatype: int32 },

# { name: Species, datatype: string },

# { name: Name, datatype: string },

# { name: Legs, datatype: int32 },

# { name: Height, datatype: float64, unit: m },

# { name: Mammal, datatype: bool },

# ]

index,Species,Name,Legs,Height,Mammal

1,pig,Bland,4,,True

2,cow,Daisy,4,2,True

3,goldfish,Dobbin,,0.05,False

4,ant,,6,0.001,False

5,ant,,6,0.001,False

6,human,Mark,2,1.9,True

If you follow this pattern, it's possible to write your own ECSV files by

taking an existing CSV file

and decorating it with a header that gives column datatypes,

and possibly other metadata such as units.

This allows you to force the datatype of given columns

(the CSV reader guesses datatype based on content, but can get it wrong)

and it can also be read much more efficiently than a CSV file

and its format can be detected automatically.

The header information can be provided either in the ECSV file itself,

or alongside a plain CSV file from a separate source

referenced using the header configuration option.

In Gaia EDR3 for instance, the ECSV headers are supplied alongside

the CSV files available for raw download of all tables in the

Gaia source catalogue, so e.g. STILTS can read

one of the gaia_source CSV files with full metadata

as follows:

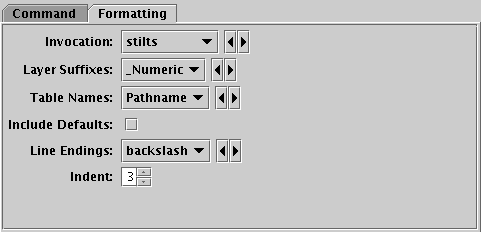

stilts tpipe

ifmt='ecsv(header=http://cdn.gea.esac.esa.int/Gaia/gedr3/ECSV_headers/gaia_source.header)'

in=http://cdn.gea.esac.esa.int/Gaia/gedr3/gaia_source/GaiaSource_000000-003111.csv.gz

The ECSV datatypes that work well with this reader are

bool,

int8, int16, int32, int64,

float32, float64

and

string.

Array-valued columns are also supported with some restrictions.

Following the ECSV 1.0 specification,

columns representing arrays of the supported datatypes can be read,

as columns with datatype: string and a suitable

subtype, e.g.

"int32[<dims>]" or "float64[<dims>]".

Fixed-length arrays (e.g. subtype: int32[3,10])

and 1-dimensional variable-length arrays

(e.g. subtype: float64[null]) are supported;

however variable-length arrays with more than one dimension

(e.g. subtype: int32[4,null]) cannot be represented,

and are read in as string values.

Null elements of array-valued cells are not supported;

they are read as NaNs for floating point data, and as zero/false for

integer/boolean data.

ECSV 1.0, required to work with array-valued columns,

is supported by Astropy v4.3 and later.

The handler behaviour may be modified by specifying

one or more comma-separated name=value configuration options

in parentheses after the handler name, e.g.

"ecsv(header=http://cdn.gea.esac.esa.int/Gaia/gedr3/ECSV_headers/gaia_source.header,colcheck=FAIL)".

The following options are available:

header = <filename-or-url>

null)

colcheck = IGNORE|WARN|FAIL

WARN)

This format can be automatically identified by its content so you do not need to specify the format explicitly when reading ECSV tables, regardless of the filename.

In many cases tables are stored in some sort of unstructured plain text format, with cells separated by spaces or some other delimiters. There is a wide variety of such formats depending on what delimiters are used, how columns are identified, whether blank values are permitted and so on. It is impossible to cope with them all, but the ASCII handler attempts to make a good guess about how to interpret a given ASCII file as a table, which in many cases is successful. In particular, if you just have columns of numbers separated by something that looks like spaces, you should be just fine.

Here are the detailed rules for how the ASCII-format tables are interpreted:

null" (unquoted) represents

the null valueBoolean,

Short

Integer,

Long,

Float,

Double,

String

NaN for not-a-number, which is treated the same as a

null value for most purposes, and Infinity or inf

for infinity (with or without a preceding +/- sign).

These values are matched case-insensitively.If the list of rules above looks frightening, don't worry, in many cases it ought to make sense of a table without you having to read the small print. Here is an example of a suitable ASCII-format table:

#

# Here is a list of some animals.

#

# RECNO SPECIES NAME LEGS HEIGHT/m

1 pig "Pigling Bland" 4 0.8

2 cow Daisy 4 2

3 goldfish Dobbin "" 0.05

4 ant "" 6 0.001

5 ant "" 6 0.001

6 ant '' 6 0.001

7 "queen ant" 'Ma\'am' 6 2e-3

8 human "Mark" 2 1.8

In this case it will identify the following columns:

Name Type

---- ----

RECNO Short

SPECIES String

NAME String

LEGS Short

HEIGHT/m Float

It will also use the text "Here is a list of some animals"

as the Description parameter of the table.

Without any of the comment lines, it would still interpret the table,

but the columns would be given the names col1..col5.

The handler behaviour may be modified by specifying

one or more comma-separated name=value configuration options

in parentheses after the handler name, e.g.

"ascii(maxSample=100000,notypes=short;float)".

The following options are available:

maxSample = <int>

0)

notypes = <type>[;<type>...]

blank, boolean, short, int, long, float, double, date, hms and dms. So if you want to make sure that all integer and floating-point columns are 64-bit (i.e. long and double respectively) you can set this value to "short;int;float".This format cannot be automatically identified

by its content, so in general it is necessary

to specify that a table is in

ASCII

format when reading it.

However, if the input file has

the extension ".txt" (case insensitive)

an attempt will be made to read it using this format.

CalTech's Infrared Processing and Analysis Center use a text-based format for storage of tabular data, defined at http://irsa.ipac.caltech.edu/applications/DDGEN/Doc/ipac_tbl.html. Tables can store column name, type, units and null values, as well as table parameters.

This format cannot be automatically identified

by its content, so in general it is necessary

to specify that a table is in

IPAC

format when reading it.

However, if the input file has

the extension ".tbl" or ".ipac" (case insensitive)

an attempt will be made to read it using this format.

An example looks like this:

\Table name = "animals.vot" \Description = "Some animals" \Author = "Mark Taylor" | RECNO | SPECIES | NAME | LEGS | HEIGHT | MAMMAL | | int | char | char | int | double | char | | | | | | m | | | null | null | null | null | null | null | 1 pig Pigling Bland 4 0.8 true 2 cow Daisy 4 2.0 true 3 goldfish Dobbin null 0.05 false 4 ant null 6 0.001 false 5 ant null 6 0.001 false 6 queen ant Ma'am 6 0.002 false 7 human Mark 2 1.8 true

NASA's Planetary Data System version 4 format is described at https://pds.nasa.gov/datastandards/. This implementation is based on v1.16.0 of PDS4.

PDS4 files consist of an XML Label file which

provides detailed metadata, and which may also contain references

to external data files stored alongside it.

This input handler looks for (binary, character or delimited)

tables in the Label;

depending on the configuration it may restrict them to those

in the File_Area_Observational area.

The Label is the file which has to be presented to this

input handler to read the table data.

Because of the relationship between the label and the data files,

it is usually necessary to move them around together.

If there are multiple tables in the label,

you can refer to an individual one using the "#"

specifier after the label file name by table name,

local_identifier, or 1-based index

(e.g. "label.xml#1" refers to the first table).

If there are Special_Constants defined

in the label, they are in most cases interpreted as blank values

in the output table data.

At present, the following special values are interpreted

as blanks:

saturated_constant,

missing_constant,

error_constant,

invalid_constant,

unknown_constant,

not_applicable_constant,

high_instrument_saturation,

high_representation_saturation,

low_instrument_saturation,

low_representation_saturation

.

Fields within top-level Groups are interpreted as array values. Any fields in nested groups are ignored. For these array values only limited null-value substitution can be done (since array elements are primitives and so cannot take null values).

This input handler is somewhat experimental, and the author is not a PDS expert. If it behaves strangely or you have suggestions for how it could work better, please contact the author.

The handler behaviour may be modified by specifying

one or more comma-separated name=value configuration options

in parentheses after the handler name, e.g.

"pds4(observational=true,checkmagic=false)".

The following options are available:

observational = true|false

<File_Area_Observational> element

of the PDS4 label should be included.

If true, only observational tables are found,

if false, other tables will be found as well.

(Default: false)

checkmagic = true|false

true)

Files in this format may contain multiple tables;

depending on the context, either one or all tables

will be read.

Where only one table is required,

either the first one in the file is used,

or the required one can be specified after the

"#" character at the end of the filename.

This format can be automatically identified by its content so you do not need to specify the format explicitly when reading PDS4 tables, regardless of the filename.

The so-called "Machine-Readable Table" format is used by AAS journals, and based on the format of readMe files used by the CDS. There is some documentation at https://journals.aas.org/mrt-standards/, which mostly builds on documentation at http://vizier.u-strasbg.fr/doc/catstd.htx, but the format is in fact quite poorly specified, so this input handler was largely developed on a best-efforts basis by looking at MRT tables actually in use by AAS, and with assistance from AAS staff. As such, it's not guaranteed to succeed in reading all MRT files out there, but it will try its best.

It only attempts to read MRT files themselves, there is currently no capability to read VizieR data tables which provide the header and formatted data in separate files; however, if a table is present in VizieR, there will be options to download it in more widely used formats that can be used instead.

An example looks like this:

Title: A search for multi-planet systems with TESS using a Bayesian

N-body retrieval and machine learning

Author: Pearson K.A.

Table: Stellar Parameters

================================================================================

Byte-by-byte Description of file: ajab4e1ct2_mrt.txt

--------------------------------------------------------------------------------

Bytes Format Units Label Explanations

--------------------------------------------------------------------------------

1- 9 I9 --- ID TESS Input Catalog identifier

11- 15 F5.2 mag Tmag Apparent TESS band magnitude

17- 21 F5.3 solRad R* Stellar radius

23- 26 I4 K Teff Effective temperature

28- 32 F5.3 [cm/s2] log(g) log surface gravity

34- 38 F5.2 [Sun] [Fe/H] Metallicity

40- 44 F5.3 --- u1 Linear Limb Darkening

46- 50 F5.3 --- u2 Quadratic Limb Darkening

--------------------------------------------------------------------------------

231663901 12.35 0.860 5600 4.489 0.00 0.439 0.138

149603524 9.72 1.280 6280 4.321 0.24 0.409 0.140

336732616 11.46 1.400 6351 4.229 0.00 0.398 0.140

231670397 9.85 2.070 6036 3.934 0.00 0.438 0.117

...

The handler behaviour may be modified by specifying

one or more comma-separated name=value configuration options

in parentheses after the handler name, e.g.

"mrt(checkmagic=false,usefloat=true)".

The following options are available:

checkmagic = true|false

Title: ".

Setting this true is generally a good idea

to avoid attempting to parse non-MRT files,

but you can set it false to attempt to read an MRT file

that starts with the wrong sequence.

(Default: true)

usefloat = true|false

If it is set true,

then encountering values outside the representable range

will be reported in accordance with the current ErrorMode.

(Default: false)

errmode = IGNORE|WARN|FAIL

warn)

This format can be automatically identified by its content so you do not need to specify the format explicitly when reading MRT tables, regardless of the filename.

Parquet is a columnar format developed within the Apache project. Data is compressed on disk and read into memory before use. The file format is described at https://github.com/apache/parquet-format. This software is written with reference to version 2.10.0 of the format.

This input handler will read columns representing scalars, strings and one-dimensional arrays of the same. It is not capable of reading multi-dimensional arrays, more complex nested data structures, or some more exotic data types like 96-bit integers. If such columns are encountered in an input file, a warning will be emitted through the logging system and the column will not appear in the read table. Support may be introduced for some additional types if there is demand.

Parquet files typically do not contain rich metadata

such as column units, descriptions, UCDs etc.

To remedy that, this reader supports the

VOParquet convention (version 1.0),

in which metadata is recorded in a DATA-less VOTable

stored in the parquet file header.

If such metadata is present it will by default be used,

though this can be controlled using the votmeta

configuration option below.

Depending on the way that the table is accessed, the reader tries to take advantage of the column and row block structure of parquet files to read the data in parallel where possible.

Note:

The parquet I/O handlers require large external libraries, which are not always bundled with the library/application software because of their size. In some configurations, parquet support may not be present, and attempts to read or write parquet files will result in a message like:Parquet-mr libraries not availableIf you can supply the relevant libaries on the classpath at runtime, the parquet support will work. At time of writing, the required libraries are included in thetopcat-extra.jarmonolithic jar file (though nottopcat-full.jar), and are included if you have thetopcat-all.dmgfile. They can also be found in the starjava github repository (https://github.com/Starlink/starjava/tree/master/parquet/src/lib or you can acquire them from the Parquet MR package. These arrangements may be revised in future releases, for instance if parquet usage becomes more mainstream. The required dependencies are a minimal subset of those required by the Parquet MR submoduleparquet-cliat version 1.13.1, in particular the filesaircompressor-0.21.jarcommons-collections-3.2.2.jarcommons-configuration2-2.1.1.jarcommons-lang3-3.9.jarfailureaccess-1.0.1.jarguava-27.0.1-jre.jarhadoop-auth-3.2.3.jarhadoop-common-3.2.3.jarhadoop-mapreduce-client-core-3.2.3.jarhtrace-core4-4.1.0-incubating.jarparquet-cli-1.13.1.jarparquet-column-1.13.1.jarparquet-common-1.13.1.jarparquet-encoding-1.13.1.jarparquet-format-structures-1.13.1.jarparquet-hadoop-1.13.1.jarparquet-jackson-1.13.1.jarslf4j-api-1.7.22.jarslf4j-nop-1.7.22.jarsnappy-java-1.1.8.3.jarstax2-api-4.2.1.jarwoodstox-core-5.3.0.jarzstd-jni-1.5.0-1.jar.

These libraries support some, but not all, of the compression formats defined for parquet, currentlyuncompressed,gzip,snappy,zstdandlz4_raw. Supplying more of the parquet-mr dependencies at runtime would extend this list. Unlike the rest of TOPCAT/STILTS/STIL which is written in pure java, some of these libraries (currently the snappy and zstd compression codecs) contain native code, which means they may not work on all architectures. At time of writing all common architectures are covered, but there is the possibility of failure with ajava.lang.UnsatisfiedLinkErroron other platforms if attempting to read/write files that use those compression algorithms.

The handler behaviour may be modified by specifying

one or more comma-separated name=value configuration options

in parentheses after the handler name, e.g.

"parquet(cachecols=true,nThread=4)".

The following options are available:

cachecols = true|false|null

true, then when the table is loaded,

all data is read by column into local scratch disk files,

which is generally the fastest way to ingest all the data.

If false, the table rows are read as required,

and possibly cached using the normal STIL mechanisms.

If null (the default), the decision is taken

automatically based on available information.

(Default: null)

nThread = <int>

cachecols option.

If the value is <=0 (the default), a value is chosen

based on the number of apparently available processors.

(Default: 0)

tryUrl = true|false

false)

votmeta = true|false|null

IVOA.VOTable-Parquet.content

will be read to supply the metadata for the input table,

following the

VOParquet convention.

If false, any such VOTable metadata is ignored.

If set null, the default, then such VOTable metadata

will be used only if it is present and apparently consistent

with the parquet data and metadata.

(Default: null)

votable = <filename-or-url>

votmeta

configuration is not false.

(Default: null)

This format can be automatically identified by its content so you do not need to specify the format explicitly when reading parquet tables, regardless of the filename.

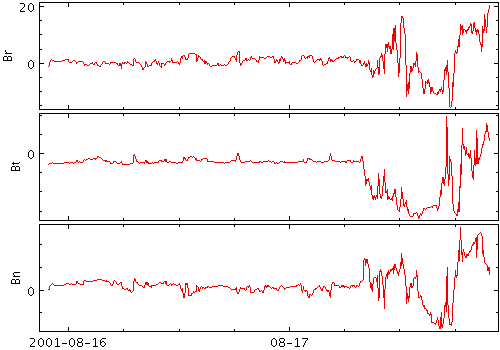

HAPI, the

Heliophysics Data Application Programmer's Interface

is a protocol for serving streamed time series data.

This reader can read HAPI CSV and binary tables

if they include header information

(the include=header request parameter

must be present).

An example HAPI URL is

https://vires.services/hapi/data?dataset=GRACE_A_MAG&start=2009-01-01&stop=2009-01-02&include=header

While HAPI data is normally accessed directly from the service, it is possible to download a HAPI stream to a local file and use this handler to read it from disk.

This format cannot be automatically identified

by its content, so in general it is necessary

to specify that a table is in

HAPI

format when reading it.

However, if the input file has

the extension ".hapi" (case insensitive)

an attempt will be made to read it using this format.

The Feather file format is a column-oriented binary disk-based format based on Apache Arrow and supported by (at least) Python, R and Julia. Some description of it is available at https://github.com/wesm/feather and https://blog.rstudio.com/2016/03/29/feather/. It can be used for large datasets, but it does not support array-valued columns. It can be a useful format to use for exchanging data with R, for which FITS I/O is reported to be slow.

At present CATEGORY type columns are not supported, and metadata associated with TIME, DATE and TIMESTAMP columns is not retrieved.

This format can be automatically identified by its content so you do not need to specify the format explicitly when reading feather tables, regardless of the filename.

GBIN format is a special-interest file format used within DPAC, the Data Processing and Analysis Consortium working on data from the Gaia astrometry satellite. It is based on java serialization, and in all of its various forms has the peculiarity that you only stand any chance of decoding it if you have the Gaia data model classes on your java classpath at runtime. Since the set of relevant classes is very large, and also depends on what version of the data model your GBIN file corresponds to, those classes will not be packaged with this software, so some additional setup is required to read GBIN files.

As well as the data model classes, you must provide on the runtime classpath the GaiaTools classes required for GBIN reading. The table input handler accesses these by reflection, to avoid an additional large library dependency for a rather niche requirement. It is likely that since you have to supply the required data model classes you will also have the required GaiaTools classes to hand as well, so this shouldn't constitute much of an additional burden for usage.

In practice, if you have a jar file or files for pretty much any

java library or application which is capable of reading a given

GBIN file, just adding it or them to the classpath at runtime

when using this input handler ought to do the trick.

Examples of such jar files are

the

MDBExplorerStandalone.jar

file available from

https://gaia.esac.esa.int/mdbexp/,

or the gbcat.jar file you can build from the

CU9/software/gbcat/

directory in the DPAC subversion repository.

The GBIN format doesn't really store tables, it stores arrays of java objects, so the input handler has to make some decisions about how to flatten these into table rows.

In its simplest form, the handler basically looks for public instance

methods of the form getXxx()

and uses the Xxx as column names.

If the corresponding values are themselves objects with suitable getter

methods, those objects are added as new columns instead.

This more or less follows the practice of the gbcat

(gaia.cu1.tools.util.GbinInterogator) tool.

Method names are sorted alphabetically.

Arrays of complex objects are not handled well,

and various other things may trip it up.

See the source code (e.g. uk.ac.starlink.gbin.GbinTableProfile)

for more details.

If the object types stored in the GBIN file are known to the

special metadata-bearing class

gaia.cu9.tools.documentationexport.MetadataReader

and its dependencies, and if that class is on the runtime classpath,

then the handler will be able to extract additional metadata as available,

including standardised column names,

table and column descriptions, and UCDs.

An example of a jar file containing this metadata class alongside

data model classes is GaiaDataLibs-18.3.1-r515078.jar.

Note however at time of writing there are some deficiencies with this

metadata extraction functionality related to unresolved issues

in the upstream gaia class libraries and the relevant

interface control document

(GAIA-C9-SP-UB-XL-034-01, "External Data Centres ICD").

Currently columns appear in the output table in a more or less

random order, units and Utypes are not extracted,

and using the GBIN reader tends to cause a 700kbyte file "temp.xml"

to be written in the current directory.

If the upstream issues are fixed, this behaviour may improve.

Note: support for GBIN files is somewhat experimental. Please contact the author (who is not a GBIN expert) if it doesn't seem to be working properly or you think it should do things differently.

Note: there is a known bug in some versions of

GaiaTools (caused by a bug in its dependency library zStd-jni)

which in rare cases can fail to read all the rows in a GBIN input file.

If this bug is encountered by the reader, it will by default

fail with an error mentioning zStd-jni.

In this case, the best thing to do is to put a fixed version of zStd-jni

or GaiaTools on the classpath.

However, if instead you set the config option readMeta=false

the read will complete without error, though the missing rows will not

be recovered.

The handler behaviour may be modified by specifying

one or more comma-separated name=value configuration options

in parentheses after the handler name, e.g.

"gbin(hierarchicalNames=true,readMeta=false)".

The following options are available:

hierarchicalNames = true|false

Astrometry_Alpha", if false they may just be called "Alpha".

In case of name duplication however, the hierarchical form is always used.

(Default: false)

readMeta = true|false

Setting this false can prevent failing on an error related to a broken version of the zStd-jni library in GaiaTools.

Note however that in this case the data read, though not reporting an error, will silently be missing some rows from the GBIN file.

(Default: true)

This format can be automatically identified by its content so you do not need to specify the format explicitly when reading GBIN tables, regardless of the filename.

Example:

Suppose you have the MDBExplorerStandalone.jar file

containing the data model classes, you can read GBIN files by

starting TOPCAT like this:

topcat -classpath MDBExplorerStandalone.jar ...or like this:

java -classpath topcat-full.jar:MDBExplorerStandalone.jar uk.ac.starlink.topcat.Driver ...

Tab-Separated Table, or TST, is a text-based table format used

by a number of astronomical tools including Starlink's

GAIA

and

ESO's SkyCat

on which it is based.

A definition of the format can be found in

Starlink Software Note 75.

The implementation here ignores all comment lines: special comments

such as the "#column-units:" are not processed.

An example looks like this:

Simple TST example; stellar photometry catalogue.

A.C. Davenhall (Edinburgh) 26/7/00.

Catalogue of U,B,V colours.

UBV photometry from Mount Pumpkin Observatory,

see Sage, Rosemary and Thyme (1988).

# Start of parameter definitions.

EQUINOX: J2000.0

EPOCH: J1996.35

id_col: -1

ra_col: 0

dec_col: 1

# End of parameter definitions.

ra<tab>dec<tab>V<tab>B_V<tab>U_B

--<tab>---<tab>-<tab>---<tab>---

5:09:08.7<tab> -8:45:15<tab> 4.27<tab> -0.19<tab> -0.90

5:07:50.9<tab> -5:05:11<tab> 2.79<tab> +0.13<tab> +0.10

5:01:26.3<tab> -7:10:26<tab> 4.81<tab> -0.19<tab> -0.74

5:17:36.3<tab> -6:50:40<tab> 3.60<tab> -0.11<tab> -0.47

[EOD]

This format cannot be automatically identified by its content, so in general it is necessary to specify that a table is in TST format when reading it.

Some support is provided for files produced by the World Data Centre for Solar Terrestrial Physics. The format itself apparently has no name, but files in this format look something like the following:

Column formats and units - (Fixed format columns which are single space separated.)

------------------------

Datetime (YYYY mm dd HHMMSS) %4d %2d %2d %6d -

%1s

aa index - 3-HOURLY (Provisional) %3d nT

2000 01 01 000000 67

2000 01 01 030000 32

...

Support for this (obsolete?) format may not be very complete or robust.

This format cannot be automatically identified by its content, so in general it is necessary to specify that a table is in WDC format when reading it.

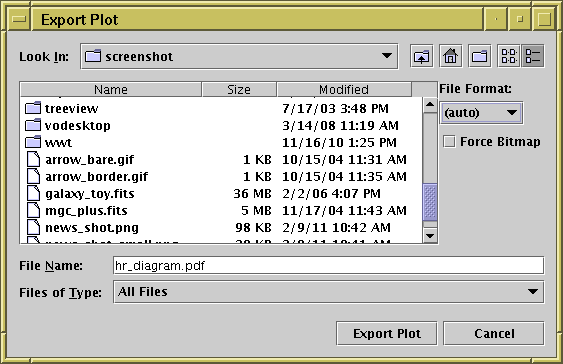

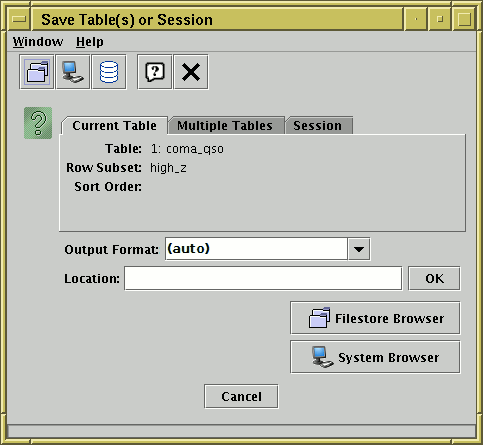

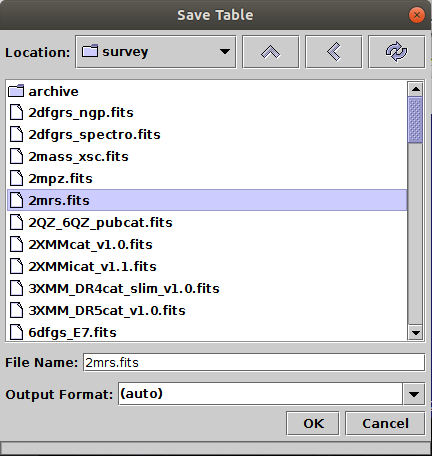

Writing out tables from TOPCAT is done using the Save Table Window. In general you have to specify the format in which you want the table to be output by selecting from the Save Window's Table Output Format selector; the following sections describe the possible choices. In some cases there are variants within each format, also described. If you use the default (auto) output format, TOPCAT will try to guess the format based on the filename you provide; the rules for that are described below as well.

The program has no "native" file format, but if you have no particular preference about which format to save tables to, FITS format is a good choice. Uncompressed FITS tables do not in most cases have to be read all the way through (they are 'mapped' into memory), which makes them very fast to load up. The FITS format which is written by default (also known as "FITS-plus") also uses a trick to store extra metadata, such as table parameters and UCDs in a way TOPCAT can read in again later. These files are quite usable as normal FITS tables by other applications, but they will only be able to see the limited metadata stored in the FITS headers. For very large files, in some circumstances the Column-Oriented FITS variant (colfits) can be more efficient, though this is unlikely to be understood except by STIL-based code (TOPCAT and STILTS). The FITS output handler with its options and variants is documented in Section 4.1.2.1. If you want to write to a format which retains all metadata in a portable format, then one of the VOTable formats might be better.

FITS is a very well-established format for storage of astronomical table or image data (see https://fits.gsfc.nasa.gov/). This writer stores tables in BINTABLE extensions of a FITS file.

There are a number of variations in exactly how the table data is

written to FITS.

These can be configured with name=value options in brackets

as described below, but for most purposes this isn't required;

you can just choose fits or one of the standard

aliases for commonly-used combinations like colfits

or fits-basic.

In all cases the output from this handler is legal FITS, but some non-standard conventions are used:

primary=votable option or fits-plus alias

(if you don't want it,

use primary=basic or fits-basic).

This convention is described in more detail in Section 4.1.3.1.

col=true option or the colfits-plus/colfits-basic

aliases.

If you write to a file with the ".colfits" extension

it is used by default.

For convenience, and compatibility with earlier versions, these standard aliases are provided:

fits or fits(primary=votable).

fits(primary=basic).

fits(primary=basic,var=true).

fits(col=true).

fits(col=true,primary=basic).

fits-basic,

but it will rearrange and rename columns as required to follow

the convention, and it will fail if the table does not contain

the required HEALPix metadata (STIL_HPX_* parameters).

The handler behaviour may be modified by specifying

one or more comma-separated name=value configuration options

in parentheses after the handler name, e.g.

"fits(primary=basic,col=false)".

The following options are available:

primary = basic|votable[n.n]|none

basic

votable[n.n]

[n.n]" part

is optional, but if included

(e.g. "votable1.5")

indicates the version of the VOTable format to use.

none

votable)

col = true|false

false)

var = FALSE|TRUE|P|Q

True stores variable-length array values

after the main part of the table in the heap,

while false stores all arrays as fixed-length

(with a length equal to that of the longest array

in the column) in the body of the table.The options P or Q can be used

to force 32-bit or 64-bit pointers for indexing into the heap,

but it's not usually necessary since a suitable choice

is otherwise made from the data.

(Default: FALSE)

date = true|false

true)

Multiple tables may be written to a single output file using this format.

If no output format is explicitly chosen,

writing to a filename with

the extension ".fits", ".fit" or ".fts" (case insensitive)

will select fits format for output.

VOTable is an XML-based format for tabular data endorsed by the International Virtual Observatory Alliance and defined in the VOTable Recommendation. While the tabular data which can be encoded is by design close to what FITS allows, it provides for much richer encoding of structure and metadata. Most of the table data exchanged by VO services is in VOTable format, but it can be used for local table storage as well.

When a table is saved to VOTable format, a document conforming to the

VOTable specification containing a single TABLE element within

a single RESOURCE element is written.

Where the table contains such information

(often obtained by reading an input VOTable),

column and table metadata will be written out as appropriate to

the attributes

unit, ucd, xtype and utype,

and the elements

COOSYS, TIMESYS and DESCRIPTION

attached to table columns or parameters.

There are various ways that a VOTable can be written;

by default the output serialization format is TABLEDATA

and the VOTable format version is 1.4, or a value controlled

by the votable.version system property.

However, configuration options are available to adjust these defaults.

The handler behaviour may be modified by specifying

one or more comma-separated name=value configuration options

in parentheses after the handler name, e.g.

"votable(format=BINARY2,version=V13)".

The following options are available:

format = TABLEDATA|BINARY|BINARY2|FITS

TABLEDATA)

version = V10|V11|V12|V13|V14|V15

V10" is version 1.0 etc.inline = true|false

true)

compact = true|false|null

null)

encoding = UTF-8|UTF-16|...

UTF-8)

date = true|false

true)

Multiple tables may be written to a single output file using this format.

If no output format is explicitly chosen,

writing to a filename with

the extension ".vot", ".votable" or ".xml" (case insensitive)

will select votable format for output.

Writes tables in the semi-standard Comma-Separated Values format. This does not preserve any metadata apart from column names, and is generally inefficient to read, but it can be useful for importing into certain external applications, such as some databases or spreadsheets.

By default, the first line is a header line giving the column names,

but this can be inhibited using the header=false

configuration option.

The delimiter option makes it possible to use non-comma

characters to separate fields. Depending on the character used this

may behave in surprising ways; in particular for space-separated fields

the ascii format may be a better choice.

The handler behaviour may be modified by specifying

one or more comma-separated name=value configuration options

in parentheses after the handler name, e.g.

"csv(header=true,delimiter=|)".

The following options are available:

header = true|false

true)

delimiter = <char>|0xNN

|", a hexadecimal character code like "0x7C", or one of the names "comma", "space" or "tab". Some choices of delimiter, for instance whitespace characters, might not work well or might behave in surprising ways.

(Default: ,)

maxCell = <int>

2147483647)

If no output format is explicitly chosen,

writing to a filename with

the extension ".csv" (case insensitive)

will select CSV format for output.

An example looks like this:

RECNO,SPECIES,NAME,LEGS,HEIGHT,MAMMAL 1,pig,Pigling Bland,4,0.8,true 2,cow,Daisy,4,2.0,true 3,goldfish,Dobbin,,0.05,false 4,ant,,6,0.001,false 5,ant,,6,0.001,false 6,queen ant,Ma'am,6,0.002,false 7,human,Mark,2,1.8,true

The Enhanced Character Separated Values format was developed within the Astropy project and is described in Astropy APE6 (DOI). It is composed of a YAML header followed by a CSV-like body, and is intended to be a human-readable and maybe even human-writable format with rich metadata. Most of the useful per-column and per-table metadata is preserved when de/serializing to this format. The version supported by this writer is currently ECSV 1.0.

ECSV allows either a space or a comma for delimiting values,

controlled by the delimiter configuration option.

If ecsv(delimiter=comma) is used, then removing

the YAML header will leave a CSV file that can be interpreted

by the CSV inputhandler or imported into other

CSV-capable applications.

Following the ECSV 1.0 specification, array-valued columns are supported. ECSV 1.0, required for working with array-valued columns, is supported by Astropy v4.3 and later.

The handler behaviour may be modified by specifying

one or more comma-separated name=value configuration options

in parentheses after the handler name, e.g.

"ecsv(delimiter=comma)".

The following options are available:

delimiter = comma|space

space" or "comma".If no output format is explicitly chosen,

writing to a filename with

the extension ".ecsv" (case insensitive)

will select ECSV format for output.

An example looks like this:

# %ECSV 1.0 # --- # datatype: # - # name: RECNO # datatype: int32 # - # name: SPECIES # datatype: string # - # name: NAME # datatype: string # description: How one should address the animal in public & private. # - # name: LEGS # datatype: int32 # meta: # utype: anatomy:limb # - # name: HEIGHT # datatype: float64 # unit: m # meta: # VOTable precision: 2 # - # name: MAMMAL # datatype: bool # meta: # name: animals.vot # Description: Some animals # Author: Mark Taylor RECNO SPECIES NAME LEGS HEIGHT MAMMAL 1 pig "Pigling Bland" 4 0.8 True 2 cow Daisy 4 2.0 True 3 goldfish Dobbin "" 0.05 False 4 ant "" 6 0.001 False 5 ant "" 6 0.001 False 6 "queen ant" Ma'am 6 0.002 False 7 human Mark 2 1.8 True

Writes to a simple plain-text format intended to be comprehensible by humans or machines.

The first line is a comment, starting with a "#" character,

naming the columns, and an attempt is made to line up data in columns

using spaces. No metadata apart from column names is written.

The handler behaviour may be modified by specifying

one or more comma-separated name=value configuration options

in parentheses after the handler name, e.g.

"ascii(maxCell=158,maxParam=160)".

The following options are available:

maxCell = <int>

158)

maxParam = <int>

160)

params = true|false

false)

sampledRows = <int>

0)

If no output format is explicitly chosen,

writing to a filename with

the extension ".txt" (case insensitive)

will select ascii format for output.

An example looks like this:

# RECNO SPECIES NAME LEGS HEIGHT MAMMAL 1 pig "Pigling Bland" 4 0.8 true 2 cow Daisy 4 2.0 true 3 goldfish Dobbin "" 0.05 false 4 ant "" 6 0.001 false 5 ant "" 6 0.001 false 6 "queen ant" "Ma\'am" 6 0.002 false 7 human Mark 2 1.8 true

Writes output in the format used by CalTech's Infrared Processing and Analysis Center, and defined at http://irsa.ipac.caltech.edu/applications/DDGEN/Doc/ipac_tbl.html. Column name, type, units and null values are written, as well as table parameters.

The handler behaviour may be modified by specifying

one or more comma-separated name=value configuration options

in parentheses after the handler name, e.g.

"ipac(maxCell=1000,maxParam=100000)".

The following options are available:

maxCell = <int>

1000)

maxParam = <int>

100000)

params = true|false

true)

sampledRows = <int>

0)

If no output format is explicitly chosen,

writing to a filename with

the extension ".tbl" or ".ipac" (case insensitive)

will select IPAC format for output.

An example looks like this:

\Table name = "animals.vot" \Description = "Some animals" \Author = "Mark Taylor" | RECNO | SPECIES | NAME | LEGS | HEIGHT | MAMMAL | | int | char | char | int | double | char | | | | | | m | | | null | null | null | null | null | null | 1 pig Pigling Bland 4 0.8 true 2 cow Daisy 4 2.0 true 3 goldfish Dobbin null 0.05 false 4 ant null 6 0.001 false 5 ant null 6 0.001 false 6 queen ant Ma'am 6 0.002 false 7 human Mark 2 1.8 true

Parquet is a columnar format developed within the Apache project. Data is compressed on disk and read into memory before use. The file format is described at https://github.com/apache/parquet-format. This software is written with reference to version 2.10.0 of the format.

The parquet file format itself defines only rather limited semantic metadata, so that there is no standard way to record column units, descriptions, UCDs etc. By default, additional metadata is written in the form of a DATA-less VOTable attached to the file footer, as described by the VOParquet convention. This additional metadata can then be retrieved by other VOParquet-aware software.

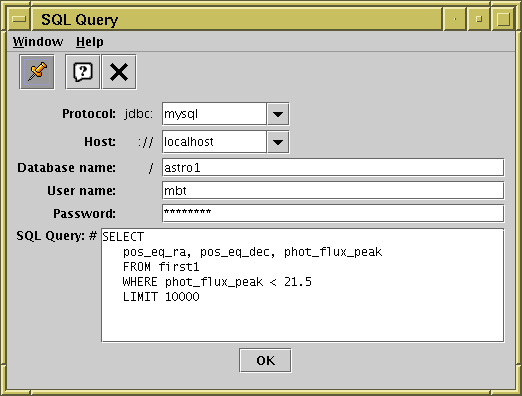

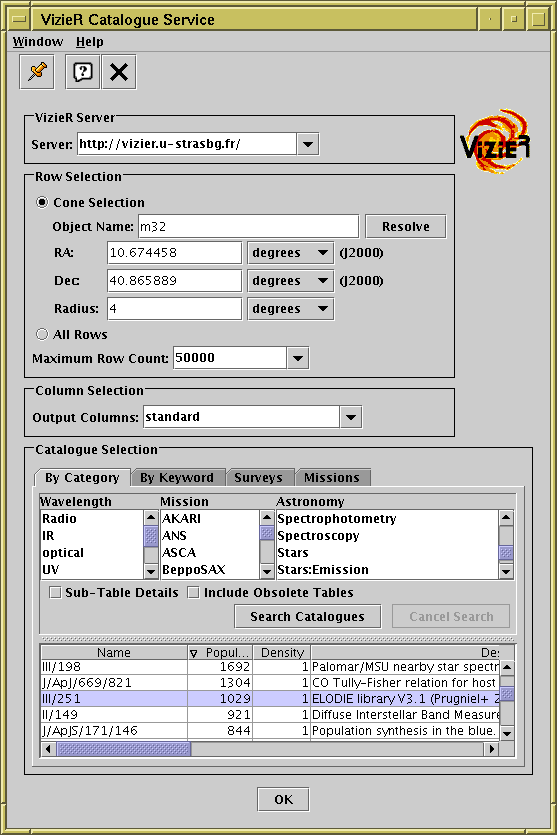

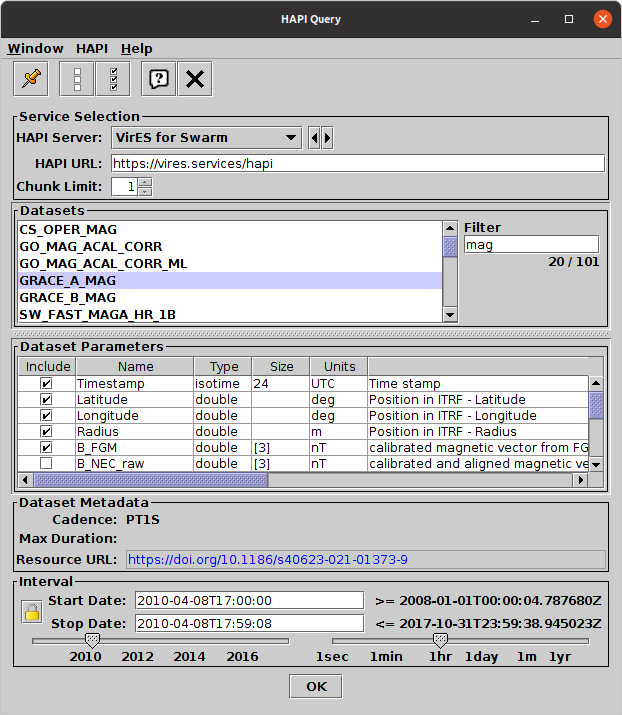

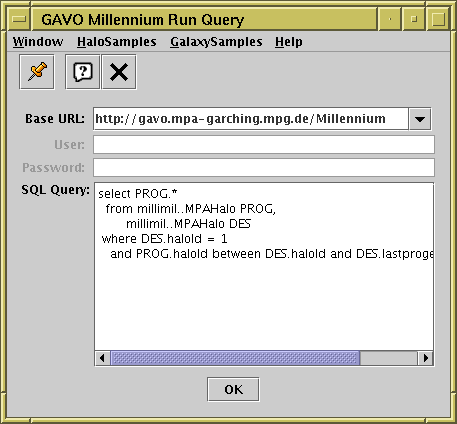

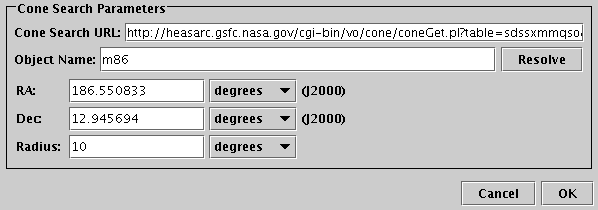

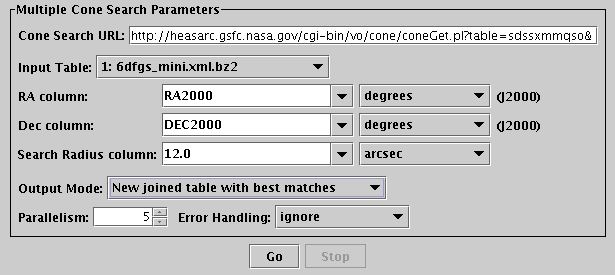

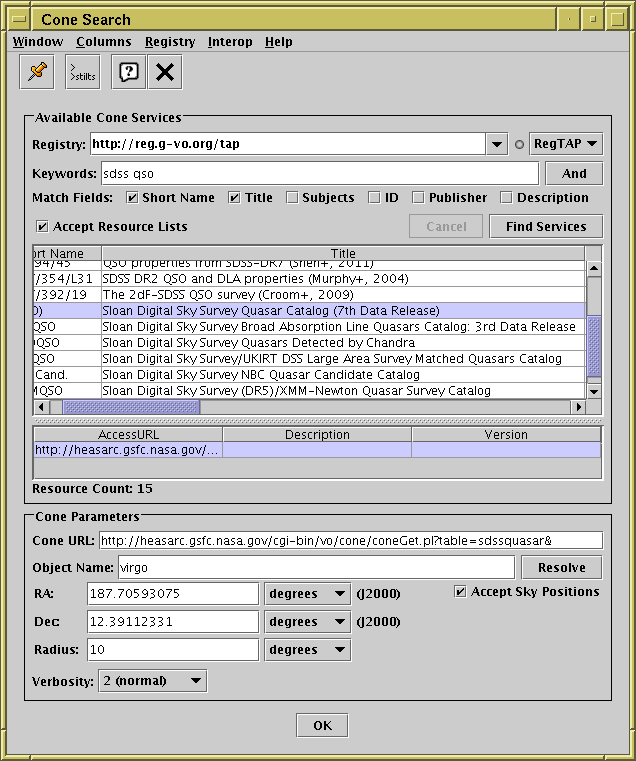

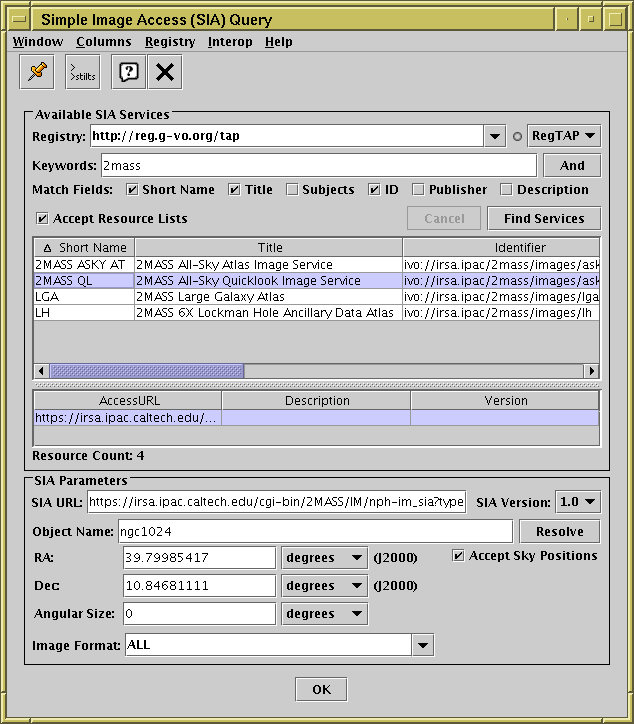

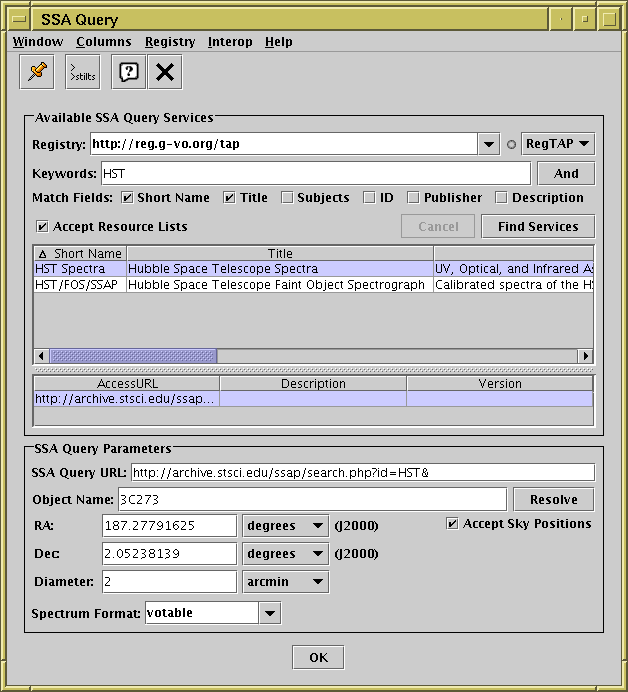

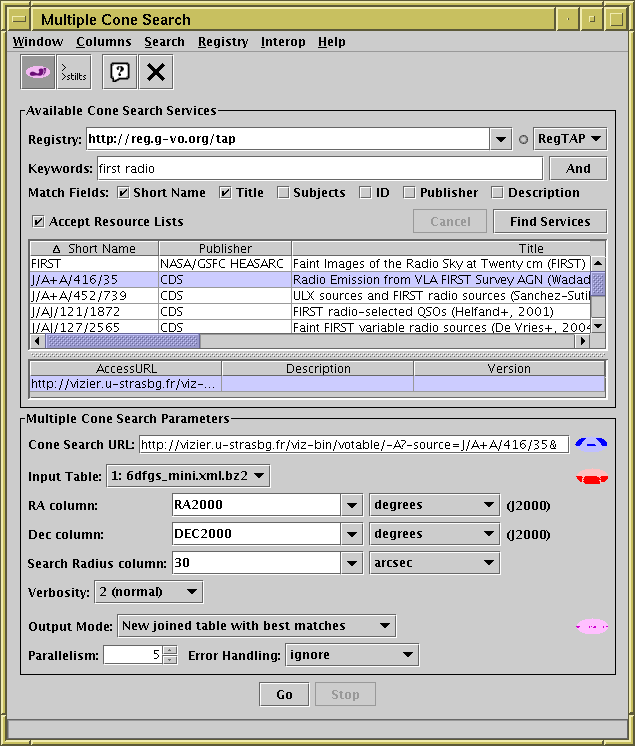

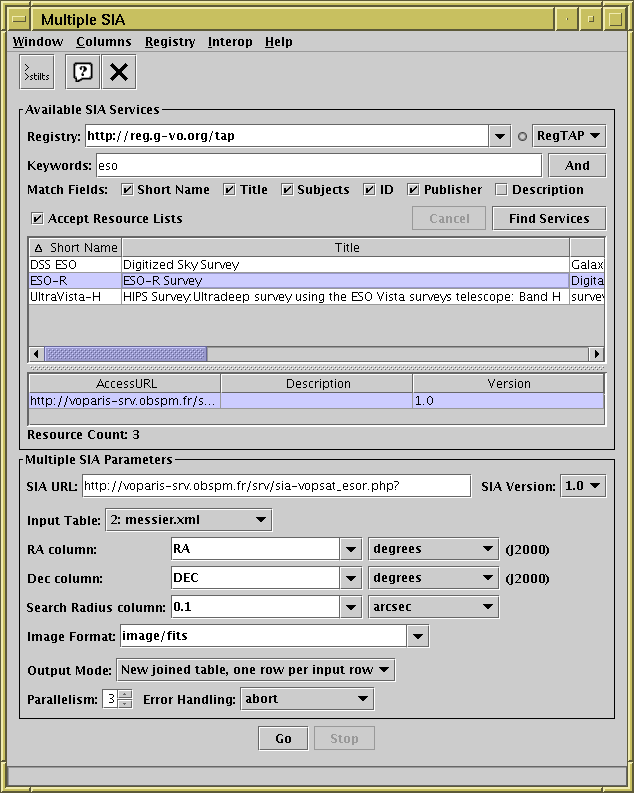

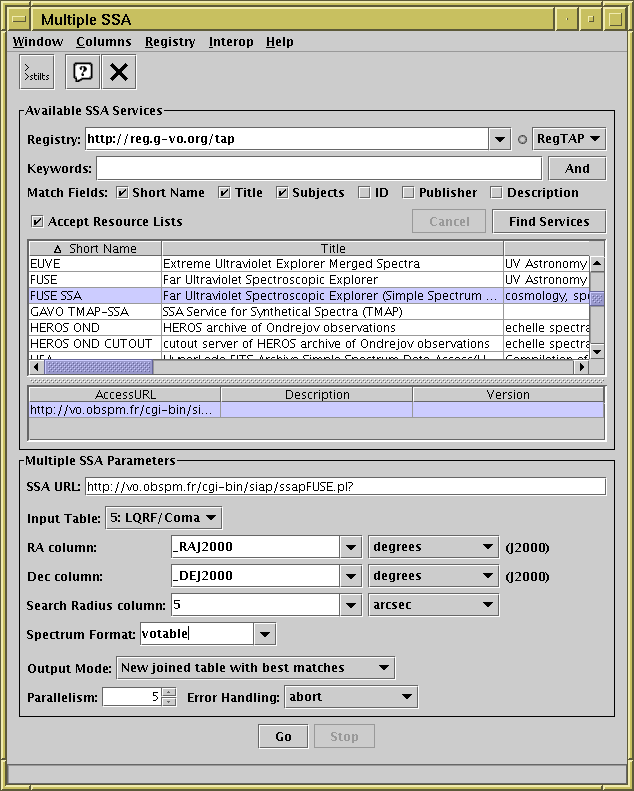

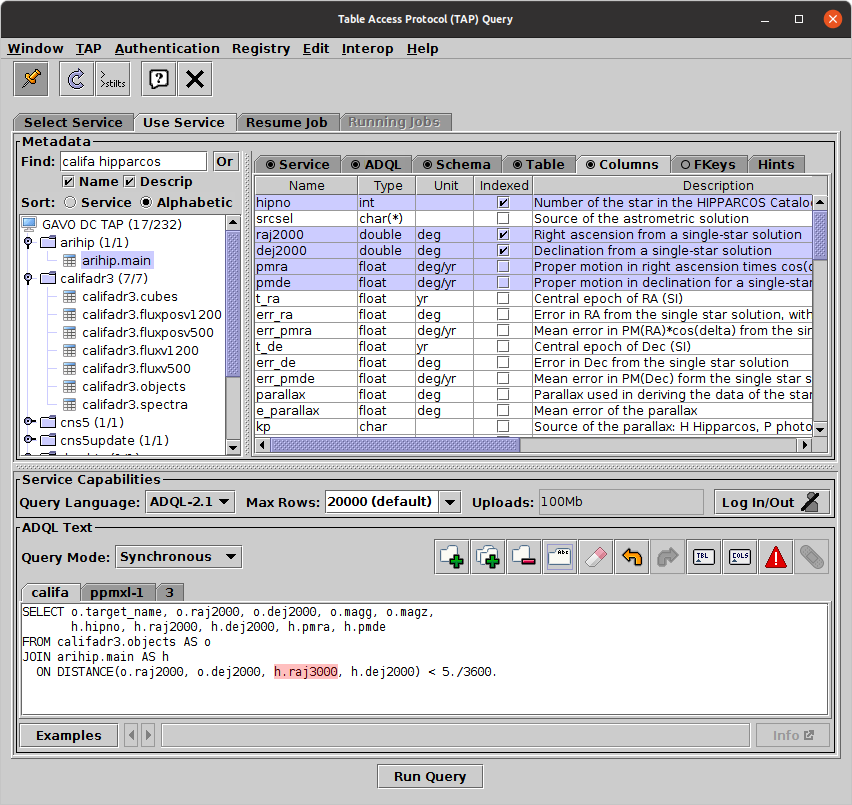

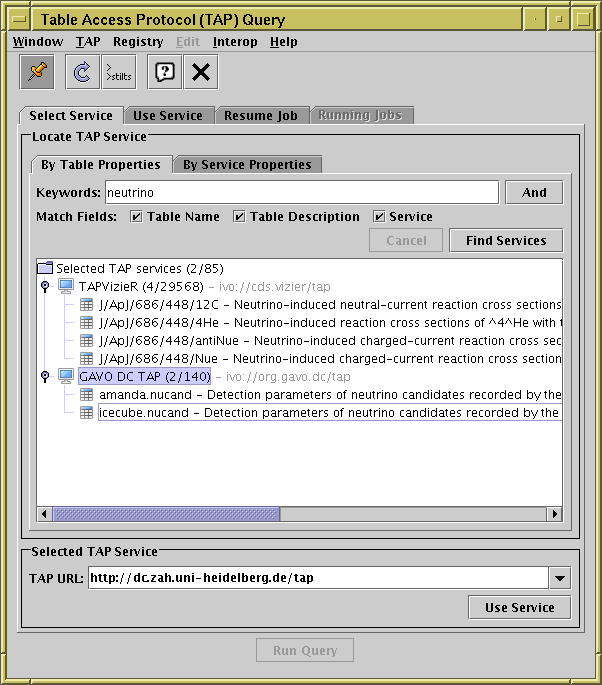

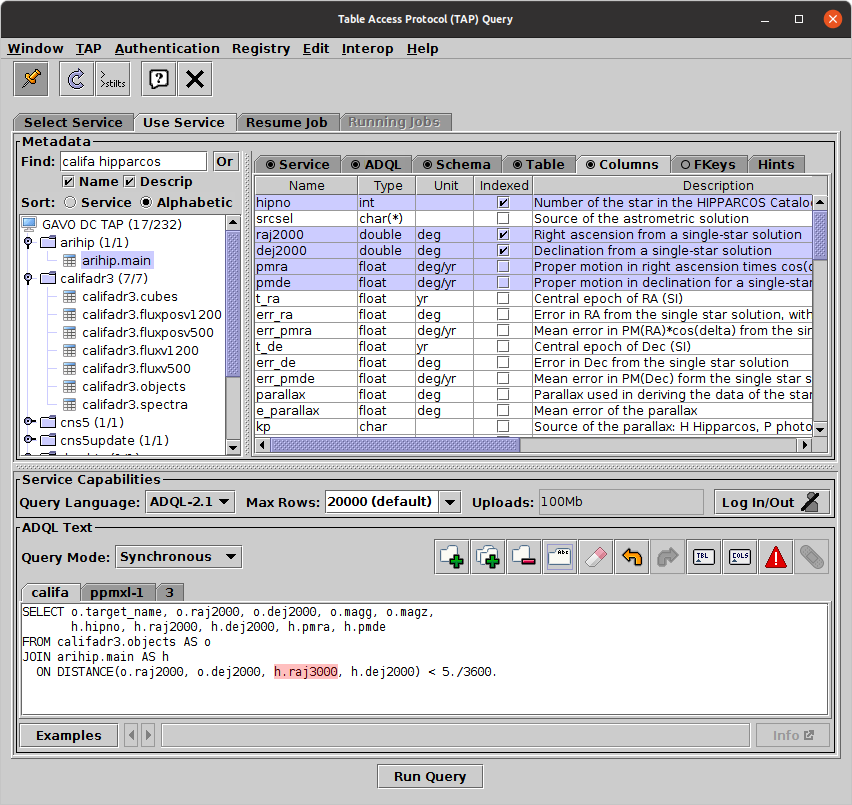

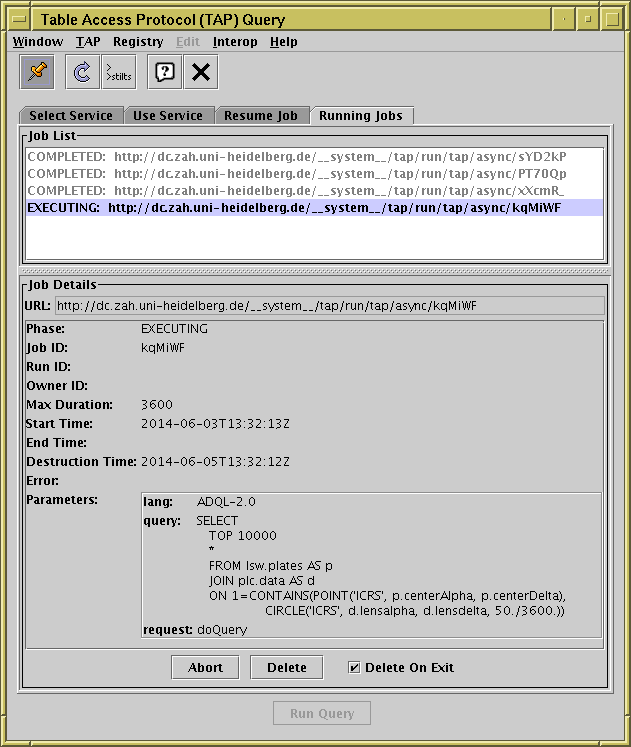

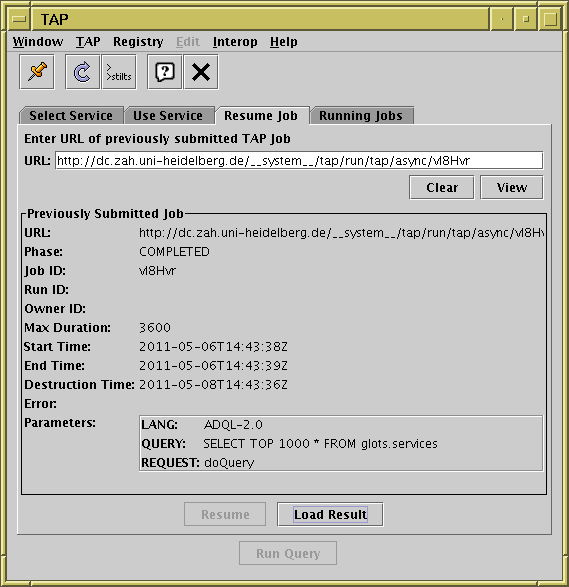

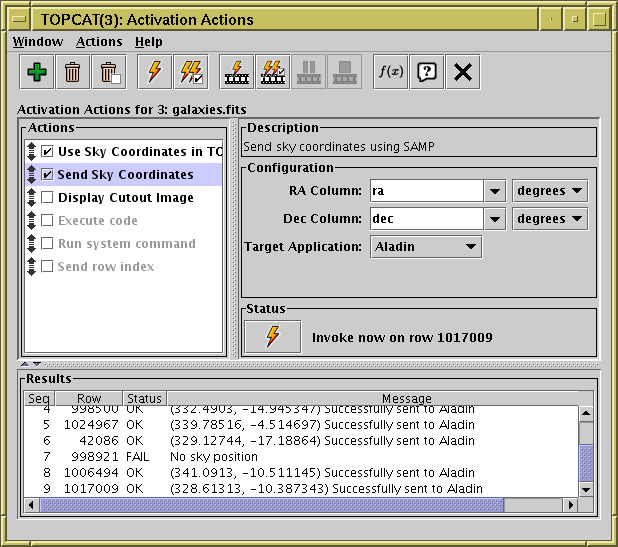

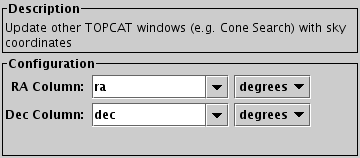

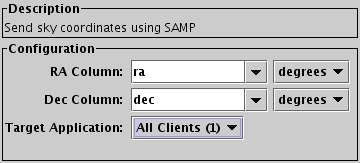

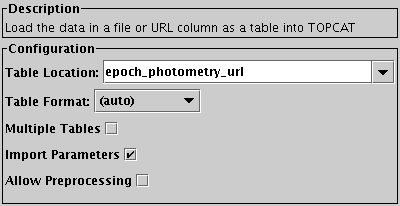

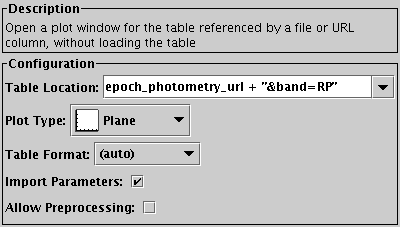

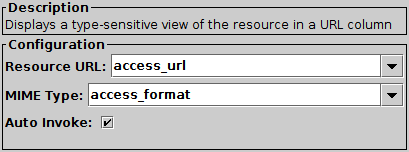

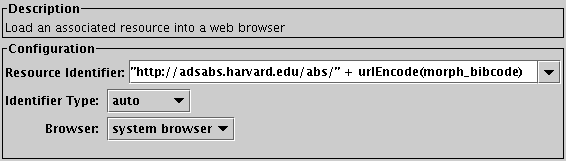

Note: